The collocate digital archive is today more familiar and arguably more relevant to the general public than the archaic archive with its attendant limitations. Following the traditional definition—“A place in which public records or other important historic documents are kept”1 or “A historical record or document so preserved”2 —the archive is an artifact of erudition. Documents are “kept”3 and “preserved” by the archivist, the “keeper of archives,”4 a “specially trained” individual with “broad deep knowledge about records” and “extensive research and analysis skills.”5

While these descriptions retain some relevance (by no means does this paper intend to slight the importance of preservation, to dismiss the significance of the material artifact, or to disregard the necessity of expertise), they do not resonate within digital environments, and they are inconsistent with the participatory practices that are transforming cultural norms, education, and scholarship. Today, everyone is—or is potentially—an archivist. Hui has argued, “We are archivists, since we have to be. We don’t have choice. This decision is already made, or determined by the contemporary technological condition.”6 Web 2.0 tools such as Delicious, Facebook, Twitter, Instagram, and Pinterest have engaged us in the archivist-like behaviors of collecting, collating, describing, and disseminating. They have fostered curation and sharing of digital objects (photos, tweets, posts, news, etc.) into heterogeneous collections comprising a range of media types (image, text, video, audio, etc.), practices that underpin not only socialization and collaboration on the web but also the development of digital archives.

Even as born-digital, user-generated, and other content is being curated and archived by the emerging neo-archivist public, the traditional archive is evolving. No longer is it exclusively material, static, and scholarly. Increasingly, it is digital, dynamic, and participatory. But despite significant advances, including those implied by the suggestive new collocate digital archive and by techno-cultural innovation, cultural heritage collections survive in widely varying conditions. Some are well cataloged and pristinely preserved; others are stacked in boxes and stored in attics. Some are locked behind closed doors and inaccessible; others are freely available on the web. And just as traditional archives can deter rather than invite public visitors, so too can digital archive interfaces. The archival community and its extended body of stakeholders (in library and information science and digital humanities) is responding to the fundamental and irrevocable7 shift being driven by digital technology, and groundbreaking work is being done to improve preservation and access.8 Yet there remains much to discuss from a methodological perspective and considerably more to do. This discussion explores the emerging intersections between archives, social curation, and participatory culture, drawing on the development of the Martha Berry Digital Archive (MBDA) as its primary example.

Held within the climate-controlled rooms of the Berry College Archives, the Martha Berry (MB) Collection is not exactly stacked in boxes or stored in an attic. But it is large, comprising more than two hundred file boxes of manuscript and typescript documents. At the outset of the MBDA project, the collection was poorly indexed, had never been adequately studied, and, owing to the “slow fire” effect that had degraded its paper, was in some instances literally crumbling on the shelves. It was on the verge of obsolescence, and the MBDA project team was challenged to identify an approach that would enable us to preserve and discover it. Our response: crowdsourcing.

Before I proceed, I should make clear that, advances in technology notwithstanding, a large collection of unedited historical documents is not necessarily in want of a digital archive. Our decision to digitize the Martha Berry Collection stemmed from a longstanding interest in the early twentieth-century educator and philanthropist for whom it is named, and in the social and historical events that, at least in part, define her:

Martha Berry was born in 1866 to wealthy parents in the American South. She enjoyed a privileged upbringing and was likely expected, like many women of her era and position, to marry and to devote her life to family and social obligations. She chose instead to commit her inheritance to founding a school, and the majority of her adult life was dedicated to ensuring its growth and success.9

To support the school, Berry established ties with influential friends and benefactors across the United States. The MB Collection documents these efforts, and it includes rich and varied personal and business correspondence written between 1902 and 1941. The collection offers a distinctly American perspective on international as well as continent-specific themes. These range from the Great War and women’s rights to educational reform and the Depression era. Berry’s own life is somewhat enigmatic, and the mystery surrounding her decision to remain unmarried, her stance as a suffragette and feminist, and her longstanding relationship with Henry and Clara Ford, among other topics, lend elements of intrigue to her story. If we want to learn more about Martha Berry and the history preserved in the MB Collection, we must index the collection. As the following discussion implies, our methods for doing so are experimental, and we acknowledge that methodological innovation is as crucial to this project as our subject.

MBDA took its methodological cue from pioneering research, which in the last ten years has detailed a series of fundamental shifts taking place in teaching, learning, and scholarly practices. In 2006, for example, Jenkins et al. published a white paper, “Confronting the Challenges of Participatory Culture: Media Education for the 21st Century.”10 Although focused primarily on connections between literacy, education, and the social and collaborative practices of teens, the report’s broader significance was unmistakable. “We are,” the authors observed, “moving away from a world in which some produce and many consume media, toward one in which everyone has a more active stake in the culture that is produced.”11 We are moving, in other words, toward a participatory culture.12

In 2009, extending earlier findings on participatory culture, Davidson and Goldberg described an emergent model of participatory learning that “includes the many ways that learners (of any age) use new technologies to participate in virtual communities where they share ideas, comment on one another’s projects, and plan, design, implement, advance, or simply discuss their practices, goals, and ideas together.”13 According to Davidson and Goldberg, “Participatory learning begins from the premise that new technologies are changing how people of all ages learn, play, socialize, exercise judgment, and engage in civic life. Learning environments—peers, family, and social institutions (such as schools, community centers, libraries, museums, even the playground, and so on)—are changing as well.”14 University College London launched Transcribe Bentham in 2010. The project’s participatory transcription methodology received international attention,15 advancing the position that community contributions have a place in scholarly endeavors.

When the MBDA project was initiated shortly thereafter, the term crowdsourcing was barely four years old and was already beginning to spread (in practice as well as in parlance) beyond its industry origins to touch even the most conservative realms of academia, including textual scholarship and archival studies. In June 2013, crowdsourcing made its Oxford English Dictionary (OED) Online debut as “one of the most recent 1% of entries recorded in OED” and among “50 entries first evidenced in the decade 2000.”16 Less than ten years after its coinage, the trend toward crowdsourcing and the underlying shift toward participatory culture are patently evident. Crowdsourcing methods have been adopted by museums, scientists, archives, educators, and others who recognize the convergence of academic and community spaces, behaviors, and goals, and the methods span disciplinary as well as national boundaries.17

MBDA is a free and open digital archive. It requires no subscription. Its holdings are released under a Creative Commons license. Its content is community driven and dynamic. MBDA does not follow the conventional publishing model in which archival materials are first described and edited by archivists and scholars, then made available to researchers, students, and others. MBDA integrates editing tasks into the digital archive interface, so that editing and dissemination occur concurrently rather than in sequence.

To date, MBDA has disseminated over ten thousand documents. Nearly 50 percent of these contain complete document descriptions, and many more are queued to be imaged, uploaded, published, and described. Although the archive is available for teaching, research, and other study and has been accessed thousands of times by visitors from across the United States and abroad,18 it not only remains in development but, because it intentionally blurs the line between publishing and editing, is designed to continue in this state indefinitely.

Brabham has defined crowdsourcing as “an online, distributed problem solving and production model that leverages the collective intelligence of online communities for specific purposes.”19 He delineates four types of crowdsourcing, among them Distributed Human Intelligence Tasking (numeration supplied):

The Distributed Human Intelligence Tasking (DHIT) crowdsourcing approach concerns information management problems where (1) the organization has the information it needs in-hand but (2) needs that batch of information analyzed or processed by humans. (3) The organization takes the information, decomposes the batch into small “microtasks,” and (4) distributes the tasks to an online community willing to perform the work. This method is ideal for data analysis problems not suitable for efficient processing by computers.20
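
For readers approaching DHIT from the technical side, Brabham’s decompose-and-distribute steps can be sketched in a few lines of Python. The sketch is illustrative only: the names `Microtask`, `decompose`, and `distribute`, the document identifiers, and the round-robin assignment are hypothetical and do not describe MBDA’s code. In MBDA, volunteers choose their own tasks rather than receiving assignments, so the round-robin step stands in for whatever distribution mechanism a given project uses.

```python
from dataclasses import dataclass
from itertools import cycle


@dataclass(frozen=True)
class Microtask:
    item_id: str   # hypothetical document identifier
    element: str   # the descriptive field a contributor is asked to supply


def decompose(item_ids, elements):
    """Step (3): break a batch into one microtask per (document, field) pair."""
    return [Microtask(item_id, element) for item_id in item_ids for element in elements]


def distribute(tasks, contributors):
    """Step (4): hand tasks to contributors in round-robin order (an assumption)."""
    if not contributors:
        raise ValueError("at least one contributor is required")
    assignments = {name: [] for name in contributors}
    for task, name in zip(tasks, cycle(contributors)):
        assignments[name].append(task)
    return assignments


# Steps (1) and (2): the organization already holds the batch but needs human processing.
batch = ["doc-0001", "doc-0002"]
tasks = decompose(batch, ["title", "date"])
assignments = distribute(tasks, ["editor_a", "editor_b"])
```

Here two documents and two fields yield four microtasks, which alternate between the two hypothetical editors.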

Although Brabham’s definition of crowdsourcing can be problematically narrow,21 DHIT nonetheless offers an opportune methodological point of reference. The following thus details MBDA’s methodology as it corresponds with DHIT:

(4) When users search or study MBDA, they are invited to assume the role of project partner by participating in editing microtasks.

Consistent with archival best practices, MBDA follows the Dublin Core (DC) Metadata Initiative’s specifications for document description. Within the MB Collection, several collection-level attributes are held in common among all documents, so editing of seven of the fifteen elements in the DC metadata element set (source, publisher, rights, language, format, identifier, and coverage) can be automated,22 enabling us to complete an initial cataloging step. However, to depict document-level nuances and to facilitate search and sort, additional document-specific descriptors are necessary. MBDA requires descriptions for six of the remaining eight DC elements (title, description, date, creator, subject, and type).
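
The arithmetic of this split can be made explicit. The following Python sketch lists the fifteen elements of the DC Metadata Element Set, separates the seven automated ones, and shows which remaining elements are left to editors. The function `apply_collection_defaults` and its placeholder values are hypothetical; it assumes collection-level defaults fill only empty fields, and, because identifiers must differ per item, it allows a default to be a function of the record.

```python
# The fifteen elements of the Dublin Core Metadata Element Set, version 1.1.
DC_ELEMENTS = (
    "title", "creator", "subject", "description", "publisher",
    "contributor", "date", "type", "format", "identifier",
    "source", "language", "relation", "coverage", "rights",
)

# Elements shared at collection level, which the text says can be automated.
AUTOMATED = ("source", "publisher", "rights", "language", "format", "identifier", "coverage")

# Document-level elements MBDA asks editors to describe.
REQUIRED_BY_EDITORS = ("title", "description", "date", "creator", "subject", "type")

remaining = [e for e in DC_ELEMENTS if e not in AUTOMATED]
not_required = [e for e in remaining if e not in REQUIRED_BY_EDITORS]


def apply_collection_defaults(record, defaults):
    """Fill automated elements that are still empty (hypothetical helper).

    A default may be a plain value or a callable taking the record, e.g. to
    derive a per-item identifier; existing values are never overwritten.
    """
    filled = dict(record)
    for element in AUTOMATED:
        if element not in filled:
            value = defaults[element]
            filled[element] = value(record) if callable(value) else value
    return filled
```

Seven automated elements leave eight; editors are asked for six of them, while contributor and relation are not required.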

Because the MB Collection is primarily epistolary, we are interested in the names of document recipients as well as authors; for that reason, we defined the item type correspondence and within it the element recipient. And because we need to solve next-stage editing challenges, we defined the element set crowdsourcing and within it the element flag for review (which enables participants to signal important documents, editing errors, and other document problems) and the element script type (which enables participants to distinguish manuscript from typescript documents, a detail important to later-stage transcription and OCR). Editing each of these elements relies on careful, individualized document review. Finally, because we wanted geographical data to inform our understanding of the correspondence, we integrated geolocation into our element set. Thus, ten elements—six DC (title, description, date, creator, subject, and type) and four project-specific (recipient, flag for review, script type, and geolocation)—are subsumed by MBDA as editing microtasks.
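
The ten microtasks can be represented as a simple schema, which also makes it easy to ask which tasks a given document still needs. In this Python sketch the field spellings and the two completion rules (recipient applies only to correspondence; flag for review is optional) are assumptions for illustration, not MBDA’s documented logic.

```python
# The ten editing microtasks named in the text: six DC, four project-specific.
DC_MICROTASKS = ("title", "description", "date", "creator", "subject", "type")
PROJECT_MICROTASKS = ("recipient", "flag_for_review", "script_type", "geolocation")
MICROTASKS = DC_MICROTASKS + PROJECT_MICROTASKS


def pending_microtasks(item):
    """Return the microtasks an item still needs; empty values count as missing.

    Hypothetical rules: "recipient" is required only for items typed as
    correspondence, and "flag_for_review" is optional for every item.
    """
    optional = {"flag_for_review"}
    if item.get("type") != "correspondence":
        optional.add("recipient")
    return [m for m in MICROTASKS if m not in optional and not item.get(m)]
```

For a letter that so far has only a type, a title, and a script type, the remaining tasks would be description, date, creator, subject, recipient, and geolocation.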

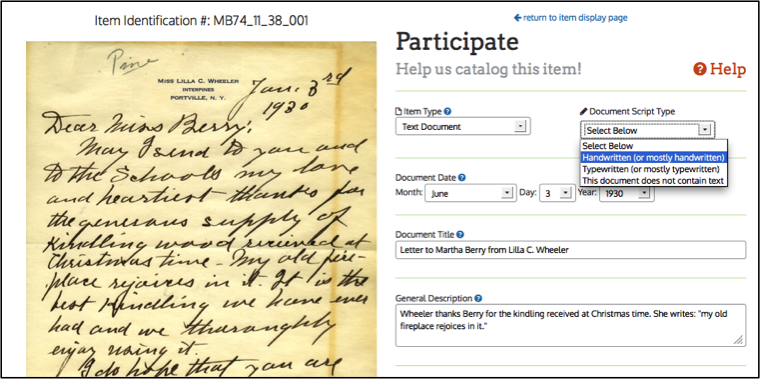

Underlying MBDA is a standards-based archival infrastructure comprising (at a foundational level) a customized, themed instance of Omeka and digital items with linked DC metadata. MBDA’s Crowd-Ed plugin, which enables participatory metadata editing and which manages user authentication, authorization, auditing, and recognition, was built using Omeka’s plugin API and was designed to create a correlation between each question on the public editing interface and either a DC metadata element or a project-specific descriptor (see Figures 1 and 2, below). DC element field designations on the public editing interface are masked for user-friendliness and familiarity (e.g., the DC element creator appears under the alias Author). This masking hides the back-end alignment with the underlying DC elements from the front end, freeing editors to focus on content rather than on a potentially obscure data model. DC as well as custom element metadata are stored in the archive database for long-term preservation and displayed immediately on the front end for review and revision by project staff and participants, as well as for search and discovery.

Figure 1. Screen capture of the editing interface; the editing fields correspond with DC or MBDA-specific elements as follows: Item Type: DC Type; Script Type: MBDA-specific; Date: DC Date; Title: DC Title; Description: DC Description; Author: DC Creator (not shown); Recipient: MBDA-specific (not shown).

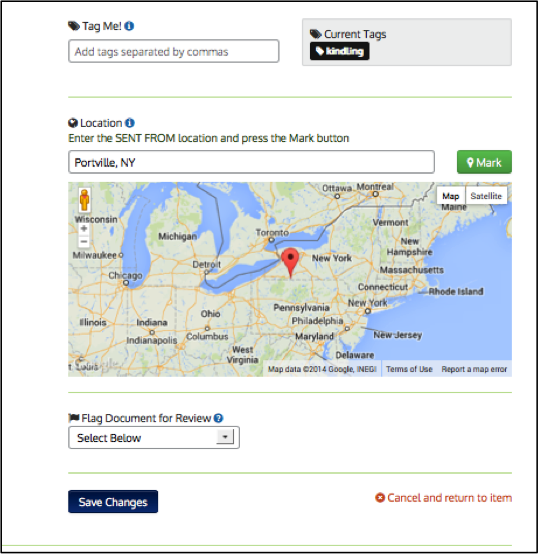

Figure 2. Screen capture of the lower section of the editing interface; the editing fields correspond with DC or MBDA-specific elements as follows: Tag: DC Subject; Location: MBDA-specific; Flag for Review: MBDA-specific.
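
The correspondences listed in Figures 1 and 2 amount to a lookup table between what editors see and what the archive stores. Crowd-Ed itself is an Omeka plugin, so the following Python sketch is not its code; it only illustrates the label-to-element indirection. The `dc:` and `mbda:` key spellings and the function `to_stored_metadata` are hypothetical.

```python
# Public-facing labels (Figures 1 and 2) mapped to back-end elements.
# "dc:" marks a Dublin Core element; "mbda:" marks a project-specific
# descriptor. The key spellings are hypothetical.
LABEL_TO_ELEMENT = {
    "Item Type": "dc:type",
    "Script Type": "mbda:script_type",
    "Date": "dc:date",
    "Title": "dc:title",
    "Description": "dc:description",
    "Author": "dc:creator",
    "Recipient": "mbda:recipient",
    "Tag": "dc:subject",
    "Location": "mbda:geolocation",
    "Flag for Review": "mbda:flag_for_review",
}


def to_stored_metadata(form):
    """Translate a submitted editing form (label -> answer) into stored metadata.

    Blank answers are dropped. An unknown label raises KeyError instead of
    being stored under a field the data model does not define.
    """
    stored = {}
    for label, answer in form.items():
        answer = answer.strip()
        if answer:
            stored[LABEL_TO_ELEMENT[label]] = answer
    return stored
```

An editor who types a name into the Author field never sees the word creator, yet the stored record is keyed to the DC element.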

MBDA’s participatory approach extends the how of metadata capture, not the underlying structured and standards-based data model recognized across digital archive projects and platforms. Each digital item therefore remains portable and extensible, and the project uses an Open Archives Initiative Protocol for Metadata Harvesting (OAI-PMH) plugin to expose and share items with other digital archives and libraries. MBDA was designed in compliance with Digital Library of Georgia (DLG) standards and is included as a collection-level record within DLG. Because DLG is a Digital Public Library of America (DPLA) partner, MBDA data, harvested by DLG,23 is available not only to users of DLG but also to users of DPLA.
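
Portability rests on the fact that harvesters receive plain DC. The following Python sketch serializes a record into the `oai_dc` metadata format used by OAI-PMH, using the standard `oai_dc` and DC namespace URIs. It is a simplified stand-in for what an OAI-PMH plugin produces: it omits the surrounding OAI-PMH response envelope and the schema-location attribute, and the function name `to_oai_dc` is hypothetical. Project-specific descriptors such as recipient are skipped, since `oai_dc` carries only the fifteen DC elements.

```python
import xml.etree.ElementTree as ET

OAI_DC_NS = "http://www.openarchives.org/OAI/2.0/oai_dc/"
DC_NS = "http://purl.org/dc/elements/1.1/"
ET.register_namespace("oai_dc", OAI_DC_NS)
ET.register_namespace("dc", DC_NS)

DC_ELEMENTS = frozenset({
    "title", "creator", "subject", "description", "publisher",
    "contributor", "date", "type", "format", "identifier",
    "source", "language", "relation", "coverage", "rights",
})


def to_oai_dc(metadata):
    """Serialize a record's DC elements as an oai_dc:dc XML string.

    Values may be a string or a list of strings, because DC elements are
    repeatable. Non-DC keys are ignored.
    """
    root = ET.Element(f"{{{OAI_DC_NS}}}dc")
    for element, value in metadata.items():
        if element not in DC_ELEMENTS:
            continue
        for v in value if isinstance(value, list) else [value]:
            ET.SubElement(root, f"{{{DC_NS}}}{element}").text = v
    return ET.tostring(root, encoding="unicode")
```

Because the project-specific descriptors stay local, the record that DLG and DPLA harvest is readable by any DC-aware system.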

One of the decisive challenges of a participatory project such as MBDA lies in its twofold purpose: editing and publishing. These are not discrete, and MBDA’s experimental editing and publishing model exploits the critical intersections between the two. The project invites individuals to visit and use the archive and to edit documents while doing so, because this hybrid approach enriches MBDA’s content and increases its utility.

To guide community contributions and to minimize inaccuracy, MBDA uses controlled vocabularies for elements such as script type and format and employs recognizable dropdown and form field interfaces for elements such as date, title, and description.24 In addition to entering metadata, participants can and do correct inaccuracies introduced by other editors, complete partial edits, add further description, and flag documents for review. Until a project staff member reviews an item and locks it to prevent further editing, it remains open to editing by authenticated users.
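
The editing rules in this paragraph (authentication, controlled vocabularies, staff locking, and the ability to revise others’ edits) can be expressed as a few checks in front of every write. This Python sketch is hypothetical throughout: the vocabulary terms, the `Item` structure, and `apply_edit`/`lock` are illustrative assumptions, and it records an audit trail because the text notes that Crowd-Ed handles auditing, not because Crowd-Ed is known to work this way.

```python
from dataclasses import dataclass, field

# Hypothetical controlled vocabularies; the project's actual term lists may differ.
CONTROLLED = {
    "script_type": {"manuscript", "typescript"},
    "flag_for_review": {"important document", "editing error", "I can't read the handwriting"},
}


class EditRejected(Exception):
    """Raised when a proposed edit violates an editing rule."""


@dataclass
class Item:
    metadata: dict = field(default_factory=dict)
    locked: bool = False
    history: list = field(default_factory=list)  # (user, element, old, new)


def apply_edit(item, user, element, value):
    """Apply one community edit; user is None for an anonymous visitor."""
    if user is None:
        raise EditRejected("only authenticated users may edit")
    if item.locked:
        raise EditRejected("item has been reviewed and locked by project staff")
    allowed = CONTROLLED.get(element)
    if allowed is not None and value not in allowed:
        raise EditRejected(f"{element} must be one of {sorted(allowed)}")
    # Record the prior value so staff can audit or revert the change.
    item.history.append((user, element, item.metadata.get(element), value))
    item.metadata[element] = value


def lock(item):
    """Staff review step: freeze the item against further community edits."""
    item.locked = True
```

Because each edit overwrites the previous value while preserving it in the history, one editor can correct another, and staff can still see what was changed before locking.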

Yet even as our open, community-driven editing model addresses archival challenges, it introduces new ones—to accuracy in particular—and some mistakes must be anticipated. Cursive handwriting, for instance, in widespread use in the early decades of the twentieth century, is today taught in fewer and fewer schools, and readers are less and less practiced in deciphering it. Errors in transcription of cursive (and cursive itself as an impediment to transcription) are therefore expected. Indeed, among the reasons to flag an item for review on the editing page is “I can’t read the handwriting.”

Figures 3 and 4 above illustrate a community editor’s misinterpretation of lavender, with the transcription cucumber supplied in its place. Project staff and regular editors can often spot this kind of misanalysis, not because all are experts in early twentieth-century handwriting, but because of their familiarity with the themes and tropes common to the collection (for example, Berry often sent lavender and lavender sachets as gifts; it is also worth noting that the spelling lavender occurs in variation with lavendar in the correspondence). Although such descriptions can be corrected, it is realistic to expect that, so long as items remain open for editing (and in some instances even after they are locked), gaps in legibility and interpretation will frustrate complete and accurate description.25

Just as online collaboration can introduce error, it can also reduce it,26 and as Martha Nell Smith has shown, exposure of errata can prove crucial to emendation.27 Smith, editor of the Dickinson Electronic Archives, has credited collaboration with correcting a transcription error of her own: for a difficult line in a poem by Susan Dickinson (see Figure 5, below), she had posited “I’m waiting but the cow’s not back.” The analysis was historically as well as textually motivated and, in an isolated, traditional, scholarly setting, sensible. Smith’s interpretation received acclaim from scholars who praised the connection made between the challenging line and its putative referent. Later, however, after a copy of the source manuscript was published online and Smith’s transcription collaboratively reviewed, she learned that her version was inaccurate and that the line actually read “I’m waiting but she comes not back.” The lesson, as Smith describes it, is clear: “Had editing of Writings of Susan Dickinson remained a conventional enterprise, the error of what I had deemed and what others had received as fact might have remained inscribed in literary history for years.”28

Figure 5. “I’m waiting but the cow’s not back.” Source: Martha Nell Smith, “Computing: What Has American Literary Study To Do with It,” American Literature 74, no. 4 (2002): 850.

In a more recent transcription case, the Metafilter weblog community aided a member in deciphering a cryptic series of letters written on an index card.29 At 4:13 p.m. on January 21, 2014, the member posted:

Within fourteen minutes, the mystery was partially solved when another user deciphered the meaning of the writing on one side of an index card. Analysis of the remaining index cards continues, and a considerable amount of text has been decrypted.

MBDA has benefitted similarly. Less than a month after we launched, a Digital Library of Georgia scholar identified a transcription error in an item’s description and contacted me to report it. Because MBDA documents and descriptions are published online and made public, it was not only possible for her, working in Georgia, to find the error, but also for me, working in Pennsylvania, to research and correct it within minutes.

As Smith’s and countless other examples make poignantly clear, “When editors work together to make as much about a text visible to as wide an audience as possible, rather than to silence opposing views or to establish one definitive text over all others, intellectual connections are more likely to be found than lost.”30

In 2010, one of the largest software companies in the world changed its design approach: “…Microsoft decided that their approach to design was not keeping pace with their users’ expectations and needs. Instead of focusing solely on the features of a product, they decided to rethink their aesthetic approach by focusing more on the user’s experience.”31 During the summer of 2013, Apple, following other developers, announced plans to abandon its skeuomorphic user interface in favor of flat design in iOS 7. These conceptual changes were made not solely for aesthetic appeal but in response to the changing needs, experiences, and abilities of users, and they are not without relevance to stakeholders in the archive community.

Browse and Search are among the most common user pathways within digital archives.32 While these remain essential to access and to study, neither acknowledges the experience brought to the archive by neo-archivists, whose immersion in participatory environments and whose prowess with new media equip them to do more. And despite all we know about the behaviors and experiences our users bring to us, neither situates the digital archive as a space for collaboration and participation. Design and interface define for our users (and for us) the purpose of the archive and their relationship to it: Are they guests in someone else’s house? Or are they intimate stakeholders?

Because MBDA’s development relies on collaboration with community editors, Participate is featured as a path equal in prominence (i.e., in size and graphics) to others such as Browse, Search, and Learn within the project’s primary navigation and home page. In response to user testing, which revealed the need for recurrent editing on-ramps, community editors can access editing links on high-traffic pages (including Home, Browse, Participate, and Community) as well as on the page of every unedited document. Buttons entitled “show me a random document” and “edit a document” link users directly to a randomly selected document in need of editing (a favorite feature among our test group), enabling them to skip the search or browse step and simply get started.
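
The “edit a document” shortcut reduces to filtering for documents that are unlocked and still incomplete, then choosing one at random. The following Python sketch assumes a uniform random choice and a hypothetical item structure (a `locked` flag and a list of `pending` microtasks); MBDA’s actual selection logic is not described in the text.

```python
import random


def random_document_needing_edits(items, rng=random):
    """Return the id of a randomly chosen unlocked item with pending microtasks.

    `items` maps a (hypothetical) item id to a dict with a boolean "locked"
    flag and a list of "pending" microtasks. Returns None when no item
    qualifies, so the interface can show a "nothing left to edit" message.
    """
    candidates = [item_id for item_id, item in items.items()
                  if not item["locked"] and item["pending"]]
    return rng.choice(candidates) if candidates else None
```

Passing a seeded `random.Random` instance as `rng` makes the choice reproducible in testing, while the default module-level generator serves live visitors.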

Editing progress is displayed on the Community page, along with the names of Top and Recent editors, and community contributions are acknowledged through badges linked to users’ profile information and through document-level citation.33 Although we are still learning about the relationships among badging, citation, and user participation, analytics reveal that Community is the second-most frequently visited page on the site and the second-most common site entrance point (in both instances, following the home page). By no means, however, do we consider these navigational and participatory facets of MBDA any less dynamic than our documentary holdings. Our decisions aim to recognize the evolving role of users as participants and as partners, and we are working to open our archival doors ever wider.
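
Lists of Top and Recent editors can be derived from an edit log. In this Python sketch the log format, a list of (editor, timestamp) pairs with sortable timestamps such as ISO 8601 strings, and the function `community_summary` are hypothetical; the ranking rules (most edits for Top, latest distinct editors for Recent) are one plausible reading of the page described above.

```python
from collections import Counter


def community_summary(edit_log, top_n=3, recent_n=3):
    """Summarize (editor, timestamp) pairs for a Community page.

    Top editors are ranked by number of edits; recent editors are the
    distinct editors with the latest edits, newest first.
    """
    counts = Counter(editor for editor, _ in edit_log)
    top = [editor for editor, _ in counts.most_common(top_n)]
    recent = []
    for editor, _ in sorted(edit_log, key=lambda entry: entry[1], reverse=True):
        if len(recent) >= recent_n:
            break
        if editor not in recent:
            recent.append(editor)
    return top, recent
```

The two lists can differ: a newcomer with a single edit may appear among Recent editors long before reaching the Top list, which gives new participants immediate visibility.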

Alan Liu once described new media encounters as those events that occur in an “unpredictable zone of contact—more borderland than border line—where (mis)understandings of new media are negotiated along twisting, partial, and contradictory vectors.”34 This space, Liu tells us, is like “the tricky frontier around a town where one deals warily with strangers because even the lowliest beggar may turn out to be a god, or vice versa. New media are always pagan media: strange, rough, and guileful; either messengers of the gods or spam.”35

As a kind of collaboratory, MBDA is situated at the boundaries of scholarly and community space. We uphold an established metadata schema, yet extend it to engage users and expand discovery. We preserve our physical collection, yet for most users our digital representations will serve as the primary source. We grapple with conventions such as citation and authority, yet we resist a traditional publishing approach. We maintain a dedicated, trained project staff, yet we rely on community partners. We value accuracy and data integrity, yet we acknowledge the probability of error. We publish texts, yet we do not lay claim to any authoritative version.

One might fairly ask of us, and indeed, of any participatory editing project: Are you a messenger of the archival gods, or are you spam?36

When the MBDA project began a few short years ago, participatory editing was uncommon. Many scholars remained skeptical of crowdsourcing, dismissing it as “too Wikipedia like”37 and thus, for all intents and purposes, as “spam.” Deliverables appeared uncertain, and the propensity for error and inaccuracy along the path of this “tricky frontier” seemed too risky. Today, in a classic nod toward Moore’s Law, the term crowdsourcing boasts 2,510,000 returns on Google. The more genteel participatory (which, according to the OED, was attested as early as 1881) garners 4,320,000. The U.S. National Archives invites “citizen archivists” to help edit its holdings, and the National Endowment for the Humanities has funded development of community transcription tools. Crowdsourced editing methods are not spam; whether they bear a deific message for the archival community remains to be seen.

It is fitting that this discussion closes with the metaphor of boundaries and borders, as we have recently begun mining our geolocation data. Martha Berry was something of a frontierswoman. Correspondence written between 1926 and 1930 links her to forty-six different states (every state in the nation save two at that time) and to thirteen countries. She maintained correspondence with Nobel laureates and presidents, poor families and the middle class. She wrote to industrialists, national leaders, and students, and to parents grieving sons killed in World War I. She wrote to critics, actresses, and entomologists. They wrote her back. She was enterprising. By some accounts, she was bold. How do we know this? Through crowdsourcing MBDA.
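
Counts like these come from aggregating geolocated items over a date range. The following Python sketch shows one way to do it, assuming hypothetical per-letter fields `year`, `country`, and (for U.S. locations) `state`; geocoding itself is out of scope. The text does not say whether the United States is counted among the thirteen countries, so this sketch simply counts every country that appears, the United States included.

```python
def geographic_reach(letters, start_year, end_year):
    """Count distinct U.S. states and countries linked to letters in a year range.

    Each letter is a dict with hypothetical keys "year", "country", and,
    for U.S. locations, an optional "state". The range is inclusive.
    """
    states, countries = set(), set()
    for letter in letters:
        if not start_year <= letter["year"] <= end_year:
            continue
        countries.add(letter["country"])
        if letter["country"] == "United States" and letter.get("state"):
            states.add(letter["state"])
    return len(states), len(countries)
```

Using sets means a state or country is counted once no matter how many letters link to it, which matches the “links her to forty-six different states” phrasing.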

The intention here is not to argue for technological determinism but to make an argument based on usage data, funding, educational change, and signals offered by prevailing cultural norms.

See Tim Causer and Valerie Wallace, “Building A Volunteer Community: Results and Findings from Transcribe Bentham,” Digital Humanities Quarterly 6, no. 2 (2012), http://www.digitalhumanities.org/dhq/vol/6/2/000125/000125.html; Stuart Dunn and Mark Hedges, “Crowd-Sourcing Scoping Study: Engaging the Crowd with Humanities Research,” Arts and Humanities Research Council: AHRC Connected Communities Theme, 2012, http://crowds.cerch.kcl.ac.uk/.

It will surprise few readers that in the United States the shift toward participatory culture and the “trend to increasingly active participation” align with the expanding number of Americans accessing the Internet. See Henry Jenkins, Sam Ford, and Joshua Green, Spreadable Media: Creating Value and Meaning in a Networked Culture (Postmillennial Pop) (New York: New York University Press, 2013), 155. While some troubling disparities in access persist, the tendency is overwhelmingly toward increased Internet usage; according to Pew Research Center’s Internet and American Life Project, usage among adults rose from 50 percent in July–August 2000 to 85 percent in August 2012. Given the trajectory suggested by the available data, there is little reason to doubt continued movement in this direction.

Cathy Davidson and David Theo Goldberg, “The Future of Learning Institutions in a Digital Age,” John D. and Catherine T. MacArthur Foundation Report on Digital Media and Learning (Cambridge: MIT Press, 2009), 2.

Ibid. The OED attributes the noun crowdsourcing to Wired writer Jeff Howe, who in 2006 described a rising trend wherein “smart companies in industries as disparate as pharmaceuticals and television discover ways to tap the latent talent of the crowd.”

According to Google Analytics data, as of January 25, 2014, visitors represented fifty different states and fifty-one distinct countries. The average visit duration is sixteen minutes.

Daren C. Brabham, “What is Crowdsourcing?” Crowdsourcing, A Blog about the Research and Practice of Crowdsourcing, accessed August 14, 2013, http://essentialcrowdsourcing.wordpress.com/overview/. The remaining three are Knowledge Discovery and Management, Broadcast Search, and Peer-Vetted Creative Production.

For example, Brabham excludes Wikipedia from strict classification within crowdsourcing due to the absence of an overseeing body.

In the case of the date element, our automations edit the year field only.

This has created a unique harvesting paradigm, one that requires periodic (rather than discrete) interaction with item metadata.

For a more detailed discussion, see Stephanie A. Schlitz, “Digital Texts, Metadata, and the Multitude: New Directions in Participatory Editing,” Variants, forthcoming.

Within the text-encoding community, the TEI Guidelines dedicate the element gap and the attribute reason to precisely this kind of editing difficulty.

The 1 percent rule suggests that while 99 percent of web users are spectators, 1 percent are active participants. For MBDA, this appears in general to hold true, both in terms of participation in the editing process and in terms of quality assurance, as the contributions of a small corps of project and community editors are benefitting the remaining users. For a recent discussion of the 1 percent rule as it applies to citizen science, see Caren Cooper, “Zen in the Art of Citizen Science: Apps for Collective Discovery and the 1 Percent Rule of the Web,” Scientific American, September 11, 2013, http://blogs.scientificamerican.com/guest-blog/2013/09/11/zen-in-the-art-of-citizen-science-apps-for-collective-discovery-and-the-1-rule-of-the-web/.

Martha Nell Smith, “Computing: What Has American Literary Study To Do with It,” American Literature 74, no. 4 (2002): 833-57.

Research and teaching resources figure prominently on the US National Archives homepage, as does Shop Online. But nested within the homepage’s submenu is a link to the Citizen Archivist Dashboard. And I cannot help but wonder, “What would happen if the National Archives privileged – in typography, graphics, and position – intellectual over fiscal contribution?”

As described in my forthcoming article in Variants, in the absence of an existing citation model for participatory editing, ours is openly experimental.

Alan Liu, “Imagining the New Media Encounter,” in A Companion to Digital Literary Studies, ed. Ray Siemens and Susan Schreibman (Malden, MA: Blackwell, 2008), http://www.digitalhumanities.org/companionDLS/.

One compelling sign of Wikipedia’s success as a participatory undertaking is its broadened acceptance in academic circles. Where once many educators and scholars not only frowned upon it but disallowed it as a reference, now it is a widely used and accepted resource. Among the many, many (many) examples of scholars engaging Wikipedia are Postcolonial Digital Humanities and #tooFEW.

Stephanie Schlitz

Associate Professor of Linguistics, Bloomsburg University of Pennsylvania

Participatory Culture, Participatory Editing, and the Emergent Archival Hybrid

By Stephanie Schlitz

April 2014

1. Introduction

The collocate digital archive is today more familiar and arguably more relevant to the general public than the archaic archive with its attendant limitations. Following the traditional definitions (“A place in which public records or other important historic documents are kept”1 or “A historical record or document so preserved”2), the archive is an artifact of erudition. Documents are “kept”3 and “preserved” by the archivist, the “keeper of archives,”4 a “specially trained” individual with “broad deep knowledge about records” and “extensive research and analysis skills.”5

While these descriptions retain some relevance (by no means does this paper intend to slight the importance of preservation, to dismiss the significance of the material artifact, or to disregard the necessity of expertise), they do not resonate within digital environments, and they are inconsistent with the participatory practices that are transforming cultural norms, education, and scholarship. Today, everyone is, or is potentially, an archivist. Hui has argued, “We are archivists, since we have to be. We don’t have choice. This decision is already made, or determined by the contemporary technological condition.”6 Web 2.0 tools such as Delicious, Facebook, Twitter, Instagram, and Pinterest have engaged us in the archivist-like behaviors of collecting, collating, describing, and disseminating. They have fostered the curation and sharing of digital objects (photos, tweets, posts, news, etc.) in heterogeneous collections comprising a range of media types (image, text, video, audio, etc.), practices that underpin not only socialization and collaboration on the web but also the development of digital archives.

Even as born-digital, user-generated, and other content is being curated and archived by the emerging neo-archivist public, the traditional archive is evolving. No longer is it exclusively material, static, and scholarly. Increasingly, it is digital, dynamic, and participatory. But despite significant advances, including those implied by the suggestive new collocate digital archive and by techno-cultural innovation, cultural heritage collections survive in widely varying conditions. Some are well cataloged and pristinely preserved; others are stacked in boxes and stored in attics. Some are locked behind closed doors and inaccessible; others are freely available on the web. And just as traditional archives can deter rather than invite public visitors, so too can digital archive interfaces. The archival community and its extended body of stakeholders (in library and information science and digital humanities) are responding to the fundamental and irrevocable7 shift being driven by digital technology, and groundbreaking work is being done to improve preservation and access.8 Yet there remains much to discuss from a methodological perspective and considerably more to do. This discussion explores the emerging intersections between archives, social curation, and participatory culture, drawing on the development of the Martha Berry Digital Archive (MBDA) as its primary example.

Held within the climate-controlled rooms of the Berry College Archives, the Martha Berry (MB) Collection is not exactly stacked in boxes or stored in an attic. But it is large, comprising more than two hundred file boxes of manuscript and typescript documents. At the outset of the MBDA project, the collection was poorly indexed, had never been adequately studied, and, owing to the “slow fire” effect of deteriorating paper, was in some instances literally crumbling on the shelves. It was on the verge of obsolescence, and the MBDA project team was challenged to identify an approach that would enable us to preserve and discover it. Our response: crowdsourcing.

Before I proceed, I should make clear that, advances in technology notwithstanding, a large collection of unedited historical documents is not necessarily in want of a digital archive. Our decision to digitize the Martha Berry Collection stemmed from a longstanding interest in the early twentieth-century educator and philanthropist for whom it is named, and in the social and historical events that, at least in part, define her:

Martha Berry was born in 1866 to wealthy parents in the American South. She enjoyed a privileged upbringing and was likely expected, like many women of her era and position, to marry and to devote her life to family and social obligations. She chose instead to commit her inheritance to founding a school, and the majority of her adult life was dedicated to ensuring its growth and success.9

To support the school, Berry established ties with influential friends and benefactors across the United States. The MB Collection documents these efforts, and it includes rich and varied personal and business correspondence written between 1902 and 1941. The collection offers a distinctly American perspective on international as well as domestic themes. These range from the Great War and women’s rights to educational reform and the Depression era. Berry’s own life is somewhat enigmatic, and the mystery surrounding her decision to remain unmarried, her stance as a suffragette and feminist, and her longstanding relationship with Henry and Clara Ford, among other topics, lend elements of intrigue to her story. If we want to learn more about Martha Berry and the history preserved in the MB Collection, we must index the collection. As the following discussion implies, our methods for doing so are experimental, and we acknowledge that methodological innovation is as crucial to this project as our subject.

2. Methodological Underpinnings

MBDA took its methodological cue from pioneering research, which in the last ten years has detailed a series of fundamental shifts taking place in teaching, learning, and scholarly practices. In 2006, for example, Jenkins et al. published a white paper, “Confronting the Challenges of Participatory Culture: Media Education for the 21st Century.”10 Although focused primarily on connections between literacy, education, and the social and collaborative practices of teens, the report’s broader significance was unmistakable. “We are,” the authors observed, “moving away from a world in which some produce and many consume media, toward one in which everyone has a more active stake in the culture that is produced.”11 We are moving, in other words, toward a participatory culture.12

In 2009, extending earlier findings on participatory culture, Davidson and Goldberg described an emergent model of participatory learning that “includes the many ways that learners (of any age) use new technologies to participate in virtual communities where they share ideas, comment on one another’s projects, and plan, design, implement, advance, or simply discuss their practices, goals, and ideas together.”13 According to Davidson and Goldberg, “Participatory learning begins from the premise that new technologies are changing how people of all ages learn, play, socialize, exercise judgment, and engage in civic life. Learning environments—peers, family, and social institutions (such as schools, community centers, libraries, museums, even the playground, and so on)—are changing as well.”14 University College London launched Transcribe Bentham in 2010, and the project’s participatory transcription methodology received international attention,15 advancing the position that community contributions have a place in scholarly endeavors.

When the MBDA project was initiated shortly thereafter, the term crowdsourcing was barely four years old and was already beginning to spread (in practice as well as in parlance) beyond its industry origins to touch even the most conservative realms of academia, including textual scholarship and archival studies. In June 2013, crowdsourcing made its Oxford English Dictionary (OED) Online debut as “one of the most recent 1% of entries recorded in OED” and among “50 entries first evidenced in the decade 2000.”16 Less than ten years after its coinage, the trend toward crowdsourcing and the underlying shift toward participatory culture are patently evident. Crowdsourcing methods have been adopted by museums, scientists, archives, educators, and others who recognize the convergence of academic and community spaces, behaviors, and goals, and the methods span disciplinary as well as national boundaries.17

3. Crowdsourcing the MB Collection

MBDA is a free and open digital archive. It requires no subscription. Its holdings are released under a Creative Commons license. Its content is community driven and dynamic. MBDA does not follow the conventional publishing model in which archival materials are first described and edited by archivists and scholars, then made available to researchers, students, and others. MBDA integrates editing tasks into the digital archive interface, and editing and dissemination occur concurrently rather than in sequence.

To date, MBDA has disseminated over ten thousand documents. Nearly 50 percent of these contain complete document descriptions, and many more are in the queue to be imaged, uploaded, published, and described. Although the archive is available for teaching, research, and other study and has been accessed thousands of times by visitors from across the United States and abroad,18 it not only remains in development but, because it intentionally blurs the line between publishing and editing, is designed to remain in this state indefinitely.

Brabham has defined crowdsourcing as “an online, distributed problem solving and production model that leverages the collective intelligence of online communities for specific purposes.”19 He delineates four types of crowdsourcing, among them Distributed Human Intelligence Tasking (numeration supplied):

The Distributed Human Intelligence Tasking (DHIT) crowdsourcing approach concerns information management problems where (1) the organization has the information it needs in-hand but (2) needs that batch of information analyzed or processed by humans. (3) The organization takes the information, decomposes the batch into small “microtasks,” and (4) distributes the tasks to an online community willing to perform the work. This method is ideal for data analysis problems not suitable for efficient processing by computers.20

Although Brabham’s definition of crowdsourcing can be problematically narrow,21 DHIT nonetheless offers an opportune methodological point of reference. The following thus details MBDA’s methodology as it corresponds with DHIT:

(1) We have the needed information: documents in the MB Collection.

(2) We need to catalog the collection, and we need document-level as well as project-specific metadata.

(3) To obtain metadata, we developed a set of editing microtasks.

(4) When users search or study MBDA, they are invited to assume the role of project partner by participating in editing microtasks.

Consistent with archival best practices, MBDA follows the Dublin Core (DC) Metadata Initiative’s specifications for document description. Within the MB Collection, several collection-level attributes are common to all documents, so editing of seven of the fifteen elements in the DC metadata element set (source, publisher, rights, language, format, identifier, and coverage) can be automated,22 enabling us to complete an initial cataloging step. However, to depict document-level nuances and to facilitate search and sort, additional document-specific descriptors are necessary. MBDA requires descriptions for six of the remaining eight DC elements (title, description, date, creator, subject, and type).
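
The element accounting above can be checked with simple set arithmetic. The sketch below lists the fifteen elements of the Dublin Core Metadata Element Set (version 1.1) and partitions them into the seven that MBDA automates and the six it asks editors to supply; the variable names are illustrative and are not drawn from MBDA's own code.

```python
# The fifteen elements of the Dublin Core Metadata Element Set, version 1.1.
DC_ELEMENTS = {
    "title", "creator", "subject", "description", "publisher",
    "contributor", "date", "type", "format", "identifier",
    "source", "language", "relation", "coverage", "rights",
}

# Collection-level elements shared across the MB Collection, filled in automatically.
AUTOMATED = {"source", "publisher", "rights", "language", "format",
             "identifier", "coverage"}

# Document-level elements that community editors are asked to describe.
REQUIRED_FROM_EDITORS = {"title", "description", "date", "creator",
                         "subject", "type"}

# Both groups must be genuine DC elements and must not overlap.
assert AUTOMATED <= DC_ELEMENTS and REQUIRED_FROM_EDITORS <= DC_ELEMENTS
assert not AUTOMATED & REQUIRED_FROM_EDITORS

remaining = DC_ELEMENTS - AUTOMATED          # elements left after automation
unused = remaining - REQUIRED_FROM_EDITORS   # remaining elements not required

print(len(remaining), sorted(unused))  # 8 ['contributor', 'relation']
```

Fifteen minus seven leaves eight elements, and contributor and relation are the two of those that fall outside the six MBDA requires.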

Because the MB Collection is primarily epistolary, we are interested in the names of document recipients as well as authors; for that reason, we defined the item type correspondence and within it the element recipient. And because we need to solve next-stage editing challenges, we defined the element set crowdsourcing and within it the elements flag for review (which enables participants to signal important documents, editing errors, and other document problems) and script type (which enables participants to distinguish manuscript from typescript documents, a detail important to later-stage transcription and OCR). Editing each of these elements relies on careful, individualized document review. Finally, because we wanted geographical data to inform our understanding of the correspondence, we integrated geolocation into our element set. Thus, ten elements are subsumed by MBDA as editing microtasks: six DC elements (title, description, date, creator, subject, and type) and four project-specific ones (recipient, flag for review, script type, and geolocation).
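
Read against steps (3) and (4) of the DHIT outline, these ten elements amount to a decomposition of each document into small tasks, one per element still lacking a value. The following is a minimal sketch, not the Crowd-Ed plugin's implementation: the snake_case element keys, the document ids, and the in-memory records are hypothetical, and it assumes that a missing review flag does not make a document incomplete.

```python
from dataclasses import dataclass, field

# The ten elements presented as editing microtasks: six Dublin Core elements
# and four project-specific ones. The keys are hypothetical names.
DC_TASK_ELEMENTS = ["title", "description", "date", "creator", "subject", "type"]
PROJECT_ELEMENTS = ["recipient", "flag_for_review", "script_type", "geolocation"]
TASK_ELEMENTS = DC_TASK_ELEMENTS + PROJECT_ELEMENTS

# Assumption: a review flag is raised only when needed, so its absence
# does not mark a document as incomplete.
OPTIONAL_ELEMENTS = {"flag_for_review"}


@dataclass
class Document:
    doc_id: str
    metadata: dict = field(default_factory=dict)  # element -> value


@dataclass(frozen=True)
class Microtask:
    doc_id: str
    element: str


def decompose(documents):
    """Split a batch into one microtask per element that still lacks a value."""
    tasks = []
    for doc in documents:
        for element in TASK_ELEMENTS:
            if element in OPTIONAL_ELEMENTS:
                continue
            if not doc.metadata.get(element):
                tasks.append(Microtask(doc.doc_id, element))
    return tasks


batch = [
    Document("mb-0001", {"title": "Sample title", "script_type": "typescript"}),
    Document("mb-0002"),
]
tasks = decompose(batch)
print(len(tasks))  # 7 open tasks for mb-0001 + 9 for mb-0002 = 16
```

Distribution (step 4) is then a matter of surfacing these tasks to whoever is browsing, rather than assigning them to particular people.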

Underlying MBDA is a standards-based archival infrastructure comprising (at a foundational level) a customized, themed instance of Omeka and digital items with linked DC metadata. MBDA’s Crowd-Ed plugin, which enables participatory metadata editing and manages user authentication, authorization, auditing, and recognition, was built using Omeka’s plugin API and was designed to map each question on the public editing interface to either a DC metadata element or a project-specific descriptor (see Figures 1 and 2, below). DC element names are masked on the public editing interface for user-friendliness and familiarity (e.g., the DC element creator appears under the alias Author), hiding the back-end alignment with the underlying DC elements and thereby freeing editors to focus on content rather than on a potentially obscure data model. DC and custom element metadata alike are stored in the archive database for long-term preservation and displayed immediately on the front end for review and revision by project staff and participants, and for search and discovery.
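
The alias layer can be pictured as a lookup table between form labels and stored elements. The Python sketch below is not the Crowd-Ed plugin, which is built on Omeka's PHP plugin API; it only illustrates the mapping, using the label-to-element pairs given in the captions of Figures 1 and 2 and a hypothetical `mbda:` prefix for project-specific elements. The sample submission is likewise invented.

```python
# Front-end labels on the public editing form, mapped to the element each answer
# is stored under. Pairs follow the figure captions; "mbda:" is a hypothetical prefix.
LABEL_TO_ELEMENT = {
    "Item Type": "dc:type",
    "Script Type": "mbda:script_type",
    "Date": "dc:date",
    "Title": "dc:title",
    "Description": "dc:description",
    "Author": "dc:creator",
    "Recipient": "mbda:recipient",
    "Tag": "dc:subject",
    "Location": "mbda:geolocation",
    "Flag for Review": "mbda:flag_for_review",
}


def to_elements(form):
    """Translate a submitted form (label -> answer) into element -> answer.

    Blank answers are dropped. An unknown label raises KeyError, so a change
    to the form cannot silently write to an unmapped field.
    """
    record = {}
    for label, answer in form.items():
        element = LABEL_TO_ELEMENT[label]
        if answer.strip():
            record[element] = answer.strip()
    return record


submission = {"Author": "Martha Berry", "Title": "  Letter about lavender sachets ", "Recipient": ""}
print(to_elements(submission))
# {'dc:creator': 'Martha Berry', 'dc:title': 'Letter about lavender sachets'}
```

Keeping the table as data rather than scattering it through the form code means a label can be reworded for editors without touching what is stored.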

Figure 1. Screen capture of the editing interface; the editing fields correspond with DC or MBDA-specific elements as follows: Item Type: DC Type; Script Type: MBDA-specific; Date: DC Date; Title: DC Title; Description: DC Description; Author: DC Creator (not shown); Recipient: MBDA-specific (not shown).

Figure 2. Screen capture of the lower section of the editing interface; the editing fields correspond with DC or MBDA-specific elements as follows: Tag: DC Subject; Location: MBDA-specific; Flag for Review: MBDA-specific.

MBDA’s participatory approach extends the how of metadata capture, not the underlying structured and standards-based data model recognized across digital archive projects and platforms. Each digital item therefore remains portable and extensible, and the project uses an Open Archives Initiative Protocol for Metadata Harvesting (OAI-PMH) plugin to expose and share items with other digital archives and libraries. MBDA was designed in compliance with Digital Library of Georgia (DLG) standards and is included as a collection-level record within DLG. Because DLG is a Digital Public Library of America (DPLA) partner, MBDA data, harvested by DLG,23 is available not only to users of DLG but also to users of DPLA.
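
MBDA relies on an existing OAI-PMH plugin for this, and the sketch below is not that plugin. Using only Python's standard library, it shows what an item might look like in the protocol's required `oai_dc` format, and why project-specific descriptors do not compromise portability: only Dublin Core fields are serialized. The `mbda:` key prefix and the sample item are hypothetical, and the sketch omits the `xsi:schemaLocation` attribute and the surrounding OAI-PMH response envelope.

```python
import xml.etree.ElementTree as ET

# Namespaces of the OAI-PMH oai_dc metadata format and of Dublin Core 1.1.
OAI_DC_NS = "http://www.openarchives.org/OAI/2.0/oai_dc/"
DC_NS = "http://purl.org/dc/elements/1.1/"
ET.register_namespace("oai_dc", OAI_DC_NS)
ET.register_namespace("dc", DC_NS)


def oai_dc_record(metadata):
    """Serialize an item's metadata as an oai_dc:dc element.

    Only keys of the form "dc:<element>" are exported; project-specific
    fields (prefixed "mbda:" here) stay local to the archive.
    """
    root = ET.Element(f"{{{OAI_DC_NS}}}dc")
    for key, values in metadata.items():
        prefix, _, element = key.partition(":")
        if prefix != "dc":
            continue
        # Dublin Core elements are repeatable: accept a single value or a list.
        for value in values if isinstance(values, list) else [values]:
            ET.SubElement(root, f"{{{DC_NS}}}{element}").text = value
    return ET.tostring(root, encoding="unicode")


item = {
    "dc:title": "Sample letter",
    "dc:subject": ["education", "philanthropy"],
    "mbda:script_type": "typescript",
}
print(oai_dc_record(item))
```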

Challenging Existing Conventions

One of the decisive challenges of a participatory project such as MBDA lies in its twofold purpose: editing and publishing. These are not discrete, and MBDA’s experimental editing and publishing model exploits the critical intersections between the two. The project invites individuals to visit and use the archive, and to edit documents while doing so, because this hybrid approach enriches MBDA’s content and increases its utility.

To guide community contributions and to minimize inaccuracy, MBDA uses controlled vocabularies for elements such as script type and format and employs familiar dropdown and form-field interfaces for elements such as date, title, and description.24 In addition to entering metadata, participants can and do correct inaccuracies introduced by other editors, finish incomplete edits, add further description, and flag documents for review. Until a project staff member reviews an item and locks it to prevent further editing, it remains open to editing by authenticated users.
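
These safeguards can be pictured as checks applied to every edit: the user must be authenticated, the item must not yet be locked, and controlled fields must take an allowed value. The class below is a hypothetical Python sketch; the vocabulary, the ISO 8601 date format, and all names are assumptions rather than MBDA's actual rules. It also keeps a history of earlier values, one simple way to support the auditing and peer correction the project describes.

```python
from datetime import date

# Hypothetical controlled vocabulary; the article names manuscript and
# typescript as the distinction editors record for script type.
SCRIPT_TYPES = {"manuscript", "typescript"}


class EditRejected(Exception):
    """Raised when an edit breaks one of the sketched editing rules."""


class Item:
    def __init__(self, item_id):
        self.item_id = item_id
        self.metadata = {}
        self.locked = False
        # Audit trail of (user, element, old value, new value) tuples.
        self.history = []

    def lock(self, user_is_staff):
        """Only project staff may lock an item once it has been reviewed."""
        if not user_is_staff:
            raise EditRejected("only project staff can lock items")
        self.locked = True

    def edit(self, user, element, value):
        """Apply one edit after checking authentication, lock state, and vocabulary."""
        if user is None:
            raise EditRejected("editing requires an authenticated user")
        if self.locked:
            raise EditRejected(f"{self.item_id} has been reviewed and locked")
        if element == "script_type" and value not in SCRIPT_TYPES:
            raise EditRejected(f"script type must be one of {sorted(SCRIPT_TYPES)}")
        if element == "date":
            try:
                date.fromisoformat(value)  # assumes ISO 8601 dates such as 1915-07-04
            except ValueError:
                raise EditRejected(f"unrecognized date: {value!r}") from None
        # Earlier values are kept so other editors and staff can review corrections.
        self.history.append((user, element, self.metadata.get(element), value))
        self.metadata[element] = value


item = Item("mb-0002")
item.edit("editor_a", "script_type", "manuscript")
item.edit("editor_b", "date", "1915-07-04")
item.lock(user_is_staff=True)
```

After the final call, any further `edit` on this item raises `EditRejected`, mirroring the point at which staff review closes a document to community editing.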

Yet even as our open, community-driven editing model addresses archival challenges, it introduces new ones, to accuracy in particular, and some mistakes must be anticipated. Cursive handwriting, for instance, widely used in the early decades of the twentieth century, is today taught in fewer and fewer schools, and readers are less and less practiced in deciphering it. Errors in the transcription of cursive (and cursive itself as an impediment to transcription) are therefore to be expected. Indeed, among the reasons to flag an item for review on the editing page is “I can’t read the handwriting.”

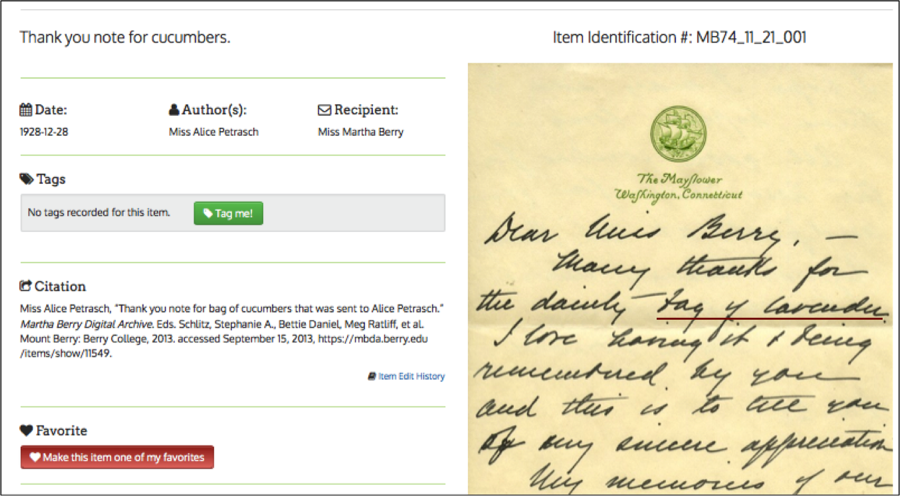

Figure 3. “Cucumber.”

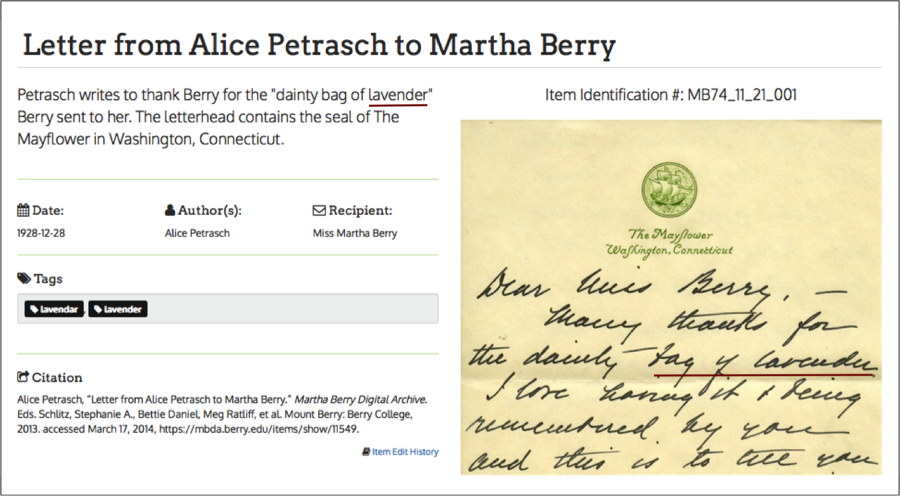

Figure 4. “Lavender.”

Figures 3 and 4 above illustrate a community editor’s misinterpretation of lavender, with the transcription cucumber supplied in its place. Project staff and regular editors can often spot this kind of misreading, not because all are experts in early twentieth-century handwriting, but because they are familiar with the themes and tropes common to the collection (Berry often sent lavender and lavender sachets as gifts, for example; it is also worth noting that the spelling lavender varies with lavendar in the correspondence). Although these editors correct such descriptions, it is realistic to expect gaps in legibility and interpretation to frustrate complete and accurate description so long as items remain open for editing, and in some instances even after they are locked.25

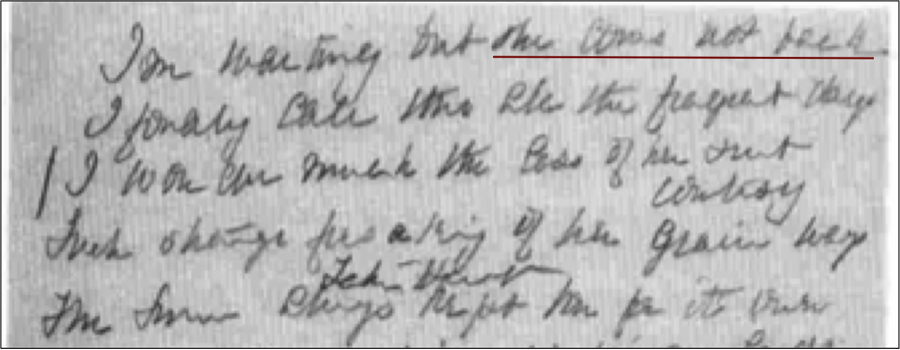

Just as online collaboration can introduce error, it can also reduce it,26 and as Martha Nell Smith has shown, exposure of errata can prove crucial to emendation.27 Smith, editor of the Dickinson Electronic Archives, has credited collaboration with the correction of a transcription error in which she had proposed “I’m waiting but the cow’s not back” as the reading of a difficult line in a poem by Susan Dickinson (see Figure 5, below). The analysis was historically as well as textually motivated and, in an isolated, traditional, scholarly setting, sensible. Smith’s interpretation received acclaim from scholars who praised the connection she drew between the challenging line and its putative referent. Later, however, after a copy of the source manuscript was published online and Smith’s transcription was collaboratively reviewed, she learned that her version was inaccurate: the line actually reads “I’m waiting but she comes not back.” The lesson, as Smith describes it, is clear: “Had editing of Writings of Susan Dickinson remained a conventional enterprise, the error of what I had deemed and what others had received as fact might have remained inscribed in literary history for years.”28

Figure 5. “I’m waiting but the cow’s not back.” Source: Martha Nell Smith, “Computing: What Has American Literary Study To Do with It,” American Literature 74, no. 4 (2002): 850.

In a more recent transcription case, the MetaFilter weblog community helped a member decipher a cryptic series of letters written on an index card.29 At 4:13 pm on January 21, 2014, the member posted:

My grandmother passed away in 1996 of a fast-spreading cancer. She was non-communicative her last two weeks, but in that time, she left at least 20 index cards with scribbled letters on them. My cousins and I were between 8-10 years old at the time, and believed she was leaving us a code. We puzzled over them for a few months trying substitution ciphers, and didn’t get anywhere.

Within fourteen minutes, the mystery was partially solved when another user deciphered the meaning of the writing on one side of an index card. Analysis of the remaining index cards continues, and a considerable amount of text has been decrypted.

Figure 6. MBDA Tweet to DLG.

MBDA has benefitted similarly. Less than a month after we launched, a Digital Library of Georgia scholar identified a transcription error in an item’s description and contacted me to report it. Because MBDA documents and descriptions are published online and made public, it was not only possible for her, working in Georgia, to find the error, but also for me, working in Pennsylvania, to research and correct it within minutes.

As Smith’s and countless other examples make poignantly clear, “When editors work together to make as much about a text visible to as wide an audience as possible, rather than to silence opposing views or to establish one definitive text over all others, intellectual connections are more likely to be found than lost.”30

4. A Critical Reframing

In 2010, one of the largest software companies in the world changed its design approach: “…Microsoft decided that their approach to design was not keeping pace with their users’ expectations and needs. Instead of focusing solely on the features of a product, they decided to rethink their aesthetic approach by focusing more on the user’s experience.”31 During the summer of 2013, Apple, following other developers, announced plans to abandon its skeuomorphic user interface in favor of flat design in iOS 7. These conceptual changes were wrought not solely for aesthetic appeal but in response to the changing needs, experiences, and abilities of users, and they are not without relevance to stakeholders in the archive community.

Browse and Search are among the most common user pathways within digital archives.32 While these remain essential to access and to study, neither acknowledges the experience that neo-archivists bring to the archive: their immersion in participatory environments and their prowess with new media equip them to do more. And despite all we know about the behaviors and experiences our users bring to us, neither situates the digital archive as a space for collaboration and participation. Design and interface define for our users (and for us) the purpose of the archive and their relationship to it: Are they guests in someone else’s house? Or are they intimate stakeholders?

Because MBDA’s development relies on collaboration with community editors, Participate is featured as a path equal in prominence (i.e., in size and graphics) to others such as Browse, Search, and Learn within the project’s primary navigation and home page. In response to user testing, which revealed the need for recurrent editing on-ramps, community editors can access editing links on high-traffic pages (including Home, Browse, Participate, and Community) as well as on the page of every unedited document. Buttons labeled “show me a random document” and “edit a document” link users directly to a randomly selected document in need of editing (a favorite feature among our test group), enabling them to skip the search or browse step and simply get started.
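
Behind an “edit a document” button, the essential logic is a uniform random pick from the documents still awaiting description. A minimal Python sketch, assuming a hypothetical mapping from document id to a completion flag; a production archive would more likely make this selection in a database query.

```python
import random


def random_unedited(documents, rng=random):
    """Return the id of a randomly chosen document that still needs editing.

    `documents` maps document id -> True if fully described. Returns None
    when nothing is left to edit, so the caller can show a message instead.
    """
    candidates = [doc_id for doc_id, complete in documents.items() if not complete]
    if not candidates:
        return None
    return rng.choice(candidates)


status = {"mb-0001": True, "mb-0002": False, "mb-0003": False}
# Passing a seeded generator makes the pick reproducible, e.g. in tests.
print(random_unedited(status, random.Random(7)))
```

Because the choice is uniform over unedited items, repeated clicks spread editors' attention across the backlog instead of concentrating it on the first documents in browse order.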

Editing progress is displayed on the Community page, along with the names of Top and Recent editors, and community contributions are acknowledged through badges linked to users’ profile information and through document-level citation.33 Although we are still learning about the relationships among badging, citation, and user participation, analytics reveal that Community is the second-most frequently visited page on the site and the second-most common entry point (in both instances, following the home page). By no means, however, do we consider these navigational and participatory facets of MBDA any less dynamic than our documentary holdings. Our decisions aim to recognize the evolving role of users as participants and as partners, and we are working to open our archival doors ever wider.
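
A Community page of this kind needs only a few aggregates over an edit log. The sketch below assumes that “top” means most edits, that “recent” means most recent distinct editors, and that the log is a simple list of (editor, document) pairs; the article does not say how MBDA ranks editors or measures progress, and the editor names are hypothetical.

```python
from collections import Counter


def community_summary(edit_log, total_documents, completed_documents, top_n=3):
    """Summarize progress and leading editors for a community page.

    edit_log is a list of (editor, document_id) pairs, oldest first.
    """
    percent = round(100 * completed_documents / total_documents, 1)
    top = Counter(editor for editor, _ in edit_log).most_common(top_n)
    recent = []
    for editor, _ in reversed(edit_log):  # walk from the newest edit backward
        if editor not in recent:
            recent.append(editor)
    return {"percent_complete": percent, "top_editors": top,
            "recent_editors": recent[:top_n]}


log = [("ann", "d1"), ("bo", "d2"), ("ann", "d3"), ("cy", "d4"), ("ann", "d5"), ("bo", "d6")]
print(community_summary(log, total_documents=10, completed_documents=4))
# {'percent_complete': 40.0, 'top_editors': [('ann', 3), ('bo', 2), ('cy', 1)], 'recent_editors': ['bo', 'ann', 'cy']}
```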

5. New Frontiers, New Discoveries

Alan Liu once described new media encounters as those events that occur in an “unpredictable zone of contact—more borderland than border line—where (mis)understandings of new media are negotiated along twisting, partial, and contradictory vectors.”34 This space, Liu tells us, is like “the tricky frontier around a town where one deals warily with strangers because even the lowliest beggar may turn out to be a god, or vice versa. New media are always pagan media: strange, rough, and guileful; either messengers of the gods or spam.”35

As a kind of collaboratory, MBDA is situated at the boundaries of scholarly and community space. We uphold an established metadata schema, yet extend it to engage users and expand discovery. We preserve our physical collection, yet for most users our digital representations will serve as the primary source. We grapple with conventions such as citation and authority, yet we resist a traditional publishing approach. We maintain a dedicated, trained project staff, yet we rely on community partners. We value accuracy and data integrity, yet we acknowledge the probability of error. We publish texts, yet we do not lay claim to any authoritative version.

One might fairly ask of us, and indeed, of any participatory editing project: Are you a messenger of the archival gods, or are you spam?36

When the MBDA project began a few short years ago, participatory editing was uncommon. Many scholars remained skeptical of crowdsourcing, dismissing it as “too Wikipedia like,”37 and thus, for all intents and purposes, as “spam.” Deliverables appeared uncertain, and the propensity for error and inaccuracy along the path of this “tricky frontier” seemed too great a risk. Today, in a classic nod toward Moore’s Law, the term crowdsourcing returns 2,510,000 results on Google. The more genteel participatory (which, according to the OED, was attested as early as 1881) garners 4,320,000. The U.S. National Archives invites “citizen archivists” to help edit its holdings, and the National Endowment for the Humanities has funded development of community transcription tools. Crowdsourced editing methods are not spam; whether they bear a deific message for the archival community remains to be seen.

It is fitting that this discussion closes with the metaphor of boundaries and borders, as we recently began mining our geolocation data. Martha Berry was something of a frontierswoman. Correspondence written between 1926 and 1930 links her to forty-six different states (every state in the nation at that time save two) and to thirteen countries. She maintained correspondence with Nobel laureates and presidents, poor families and the middle class. She wrote to parents grieving sons killed in World War I, to industrialists, national leaders, and students. She wrote to critics, actresses, and entomologists. They wrote her back. She was enterprising. By some accounts, she was bold. How do we know this? Through crowdsourcing MBDA.

Footnotes

License

This work is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 3.0 Unported License.
