Applying Theoretical Archival Principles and Policies to Actual Born-Digital Collections

By Leigh Rosin

November 2014

Few archivists would dispute the need to maintain archival integrity and adhere to the principles of provenance and original order when working with heritage collections. These principles are so well established and inherent that it has generally been assumed archivists would apply them to all formats in their collections, including those that are born digital. However, what happens when one tries to apply such traditional archival principles to actual born-digital collections? This article outlines the process, the challenges, and the lessons learned while applying the National Library of New Zealand’s archival and digital preservation policies to one particular unpublished, born-digital collection of theater and performing-arts papers. It is an excellent example of what happens when one attempts to apply traditional archival theory in the digital world. In this particular case, applying such principles enabled staff to identify how existing tools and processes could be improved, in addition to highlighting the complex and labor-intensive nature of documenting provenance and original order for born-digital collections.

1. Introduction

1.1 The Organizational Context

Since the mid-1990s the National Library of New Zealand (NLNZ) has been actively acquiring born digital heritage collections, and in 2008 it went live with its National Digital Heritage Archive (NDHA). The NDHA encompasses several ingest and access delivery components and utilizes the Ex Libris digital preservation software, Rosetta, where collections are stored for long-term retention. Following an initial technical analysis by digital archivists, unpublished digital collections are appraised; if a decision is made to retain them, then they are arranged (if necessary) and described. The work is highly collaborative and often requires input from several stakeholder groups, including digital preservation analysts, policy analysts, curators, and arrangement and description librarians.

The NLNZ collects a variety of digital items, including unpublished materials (photographs, cartoons, private papers, and oral histories) and published materials (websites, electronic publications, and music). Digital items are collected under the National Library Act[1] and acquired by purchase or donation. This paper will focus on unpublished collections.

1.2 The Policy Context

In 2011 the NLNZ, in collaboration with Archives New Zealand (ANZ), began the process of establishing policies to support its digital ingest and preservation activities. The one that had the greatest impact and relevance for ingest activities was the Preconditioning Policy, which states, “The diverse nature of digital content means that there are times when it is desirable to make changes to it before it is ingested into the preservation system. These changes are classed under the term ‘preconditioning.’”[2] The policy’s goal is to “describe the limits of change that can be introduced to digital content from the time it is brought within the control of Archives or the Library to its acceptance into the preservation system.”[3] These changes can include an alteration to (or the addition of) file extensions to better facilitate file format identification and/or the removal of unsupported characters in the file name; this enables more stable storage of the files in the preservation system’s storage database. Regardless of the change being imposed, one must be able to demonstrate that the action will not affect the intellectual content of the file. What is more, two key operating rules must be followed: “All changes must be reversible,” and all changes must have “a system-based provenance note that clearly describes the change that has been made to the file.”[4]

The business and technological context that made such a policy necessary for the NLNZ and ANZ is worth examining. All files ingested into the preservation system go through the Validation Stack (VS) in Rosetta.[5] This is a series of technical checks run against all files, where those with missing or incorrect file extensions will fail the VS and be re-routed to a technical workbench area for assessment. Within this workbench, there are limits to the actions one can perform and the tools one can use. The NLNZ has found that for large collections requiring a complex series of fixes, it is more efficient to perform these actions prior to upload. Another consequence is that the files in the preservation system will be in a relatively stable and “clean” state, which the NLNZ and ANZ hope will make them easier to report on and identify later. Such preconditioning activities might also make future file format transformations for preservation easier and less labor intensive.

In addition to this digital preservation policy, the NLNZ also adheres to such well-established archival principles as “original order,”[6] “provenance,” and “conservation.”[7] The concept of original order (“[t]he order in which records and archives were kept when in active use”) is of particular relevance when processing digital collections.[8] While there is no explicit policy statement that this principle must be applied to digital collections at the NLNZ, business procedures have naturally evolved to include it. Similarly, the concept of “authenticity” is relevant when processing digital collections and has influenced the NLNZ’s approach. Maria Guercio summarizes its relevance to digital collections: “The authenticity of a record, or rather the recognition that it has not been subject to manipulation, forgery, or substitution, entails guarantees of the maintenance of records across time and space (that is, their preservation and transmission) in terms of the provenance and integrity of records previously created.”[9] All of these preservation and archival policies and concepts govern how the NLNZ ingests and manages digital collections. As the following case study will demonstrate, such policies have a significant impact on workflows, staff resourcing, and business processes.

2. Case Study: Processing a Collection of Theater-Company Records

In 2008, the NLNZ received a donation of records from a Wellington-based theater company. The collection was made up of seven boxes, covering the period 1990-2008. The donation included both digital and non-digital collection items and was made up of posters, scripts, production notes, photographs, publicity materials, and administrative records. The digital component of the collection was made up of fifty-five disks, which included Mac- and IBM-formatted 3.5-inch floppy disks, double density 3.5-inch floppy disks, and zip disks.

¶ 11Leave a comment on paragraph 11 0

Once all fifty-five disks were analyzed, 923 of the 1,530 total files were migrated from the original media and put into temporary storage on a secure server to await further technical and curatorial analysis. In order to migrate the files from their original media, a number of different disk drives and operating systems were utilized.[10]

2.2 Technical Analysis of the Files

NLNZ’s digital preservation analyst then undertook a technical assessment of the files, using several tools to identify the file formats.[11] This analysis revealed that while most files could be opened and read, they did have various technical issues. These included file extension problems (missing or incorrect file extensions) and file naming problems (illegal/undesirable characters or full stops in the file name).[12] Of the 923 files being retained by the library, 700 (76 percent) had some kind of technical issue. The digital archivists, digital preservation analyst, and curator of the content began to plan how to address these problems. They identified a number of activities as preconditioning actions, to be performed before the files were ingested into Rosetta. Prior to conducting each preconditioning action on the original files, copies were tested to determine if the fix applied would affect the intellectual content held within the files and if it would be reversible, in accordance with the Preconditioning Policy. (A variety of methods were employed to ensure the intellectual content was preserved following preconditioning activities. These included running checksums and performing rendering checks, that is, ensuring the file can be opened in the target application and behaves as expected.) The technical assessment, in addition to revealing technical issues, also found that the files were arranged in a particular folder structure that the theater company had created, which grouped files by theater production, date, and record function.
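The checksum test mentioned above can be illustrated with a minimal Python sketch. It is not the library's actual procedure: the choice of SHA-256, the function names, and the sample file name and contents are all assumptions made for illustration. The idea is simply that renaming a working copy (for example, adding an extension) should leave the file's bytes, and therefore its checksum, unchanged.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Return the SHA-256 hex digest of a file's bytes."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def rename_preserves_content(source: Path, new_name: str) -> bool:
    """Rename a working copy and confirm that its bytes are unchanged."""
    before = sha256_of(source)
    target = source.with_name(new_name)
    source.rename(target)
    return sha256_of(target) == before


# Hypothetical working copy: the file name and contents are invented.
with tempfile.TemporaryDirectory() as tmp:
    working_copy = Path(tmp) / "PROGRAMME NOTES"
    working_copy.write_bytes(b"bytes standing in for a legacy document")
    unchanged = rename_preserves_content(working_copy, "PROGRAMME NOTES.mcw")
    print(unchanged)
```

A matching checksum only shows that the bitstream is intact; the rendering checks described above would still be needed to confirm that the file opens and behaves as expected.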

2.3 Applying Preconditioning Actions

Copies of the 700 files that required preconditioning activities were passed to the digital preservation analyst for processing. A range of tools were used to add/change file extensions and remove/replace undesirable characters from the file name.[13] A Python script was created to generate a detailed report indicating the original file name, the new file name, the original file path, and the change(s) applied to each file. Based on the preconditioning action applied, the script automatically generated a brief statement describing the action taken. At the NLNZ this statement is called a “provenance note” and forms part of a file’s core metadata. Provenance notes are created during the upload process using the NLNZ’s ingest tool, INDIGO.[14]
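The NLNZ's script is not reproduced in this article, so the following is only a rough sketch of what a report of this kind might look like. The action codes, statement wording, column names, and file names are hypothetical; only the four reported fields (original file name, new file name, original file path, and changes applied) come from the description above.

```python
import csv
import io
from dataclasses import dataclass
from pathlib import PureWindowsPath
from typing import List

# Hypothetical action codes and statement templates (not the NLNZ's wording).
STATEMENTS = {
    "extension_added": "File extension was added",
    "extension_changed": "File extension was changed",
    "characters_replaced": "Unsupported character(s) were replaced in the file name",
}


@dataclass
class Rename:
    original_path: str  # full original path, e.g. taken from a disk inventory
    new_name: str       # file name after preconditioning
    actions: List[str]  # keys into STATEMENTS, in the order applied


def report_rows(renames):
    """Yield one report row per preconditioned file."""
    for item in renames:
        original = PureWindowsPath(item.original_path)
        yield {
            "original_name": original.name,
            "new_name": item.new_name,
            "original_path": str(original.parent),
            "changes": "; ".join(STATEMENTS[action] for action in item.actions),
        }


def write_report(renames, stream):
    """Write the report as CSV to any text stream."""
    fields = ["original_name", "new_name", "original_path", "changes"]
    writer = csv.DictWriter(stream, fieldnames=fields)
    writer.writeheader()
    writer.writerows(report_rows(renames))


# Invented file names inside a folder path mentioned in the case study.
renames = [
    Rename(r"\FRINGE\FRINGE FEST 93\PRODUCTION\BUDGET",
           "BUDGET.mcw", ["extension_added"]),
    Rename(r"\FRINGE\FRINGE FEST 93\PRODUCTION\CAST.LIST",
           "CAST-LIST.mcw", ["characters_replaced", "extension_added"]),
]
buffer = io.StringIO()
write_report(renames, buffer)
report = buffer.getvalue()
print(report)
```

Keeping the original path and original name in every row is what later makes it possible to reverse a change or to rebuild the original folder context.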

2.4 Preparing to Upload the Files: Policy Dependencies

As each preconditioning action must be reversible and well documented, each file must be uploaded with enough metadata to describe its preconditioning actions in such a way that they can be undone, should it prove necessary to re-create the file exactly as it was when it arrived at the library. Another policy dependency is an archival one concerning original order. The library received the files in a particular folder structure, which provided important archival context to the collection. While the files would not be stored within the database in their original order, it was determined that the original folder path of each file was a key piece of information that should be captured as part of its object metadata.[15]
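To make the reversibility requirement concrete, here is a small sketch of how recorded metadata could be used to undo a rename and rebuild a file's original folder context. It assumes, purely for illustration, that the original relative path (including the original file name) has been stored with forward slashes; the function name and sample values are hypothetical and do not describe Rosetta or INDIGO.

```python
import shutil
import tempfile
from pathlib import Path, PurePosixPath


def restore_original(cleaned_file: Path, original_relpath: str,
                     restore_root: Path) -> Path:
    """Copy a preconditioned file back under its original folder and name.

    original_relpath is the recorded original path, relative to the top of
    the donated folder structure and written with forward slashes.
    """
    target = restore_root.joinpath(*PurePosixPath(original_relpath).parts)
    target.parent.mkdir(parents=True, exist_ok=True)
    shutil.copy2(cleaned_file, target)  # copy2 also keeps timestamps
    return target


# Hypothetical example: "BUDGET.mcw" was originally named "BUDGET".
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    cleaned = root / "BUDGET.mcw"
    cleaned.write_bytes(b"example")
    restored = restore_original(
        cleaned, "FRINGE/FRINGE FEST 93/PRODUCTION/BUDGET", root / "restored")
    restored_ok = restored.read_bytes() == b"example"
    relative = restored.relative_to(root / "restored").as_posix()
    print(relative)
```

A restore like this is only as good as the metadata captured at upload, which is why the original path and the exact change applied both need to be recorded per file.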

In the interest of better reporting on preconditioning actions in the future, a policy decision was made that a different provenance note should be created for each preconditioning activity applied. In addition, a business rule was established identifying a syntax for provenance notes so that all notes are phrased in the same way. Each note begins with a standardized prefix, so that preservation staff will be able to easily report on (and retrieve) objects on which the same types of provenance activities were performed. For example:

This file was missing an extension and had unsupported characters in the file name. Three preconditioning actions were applied to it, requiring three distinct provenance notes:

002_File extension was added: The file was submitted by the donor without a file extension. A .mcw extension was added to this filename by the digital preservation analyst as it has been identified as Microsoft Word for Macintosh document version 4.0.

003_Special character(s) were removed from the file name: The file name was submitted with forward slashes as part of the file name. On the recommendation of the digital preservation analyst these have been removed and replaced with underscores.

003_Special character(s) were removed from the file name: The file name was submitted with a full stop as part of the file name. On the recommendation of the digital preservation analyst this has been removed and replaced with a hyphen.
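The reporting benefit of a standardized prefix can be sketched in a few lines of Python. The notes below are abridged versions of the three examples above, and the parsing rule (everything before the first colon is the prefix) is an assumption made for this illustration rather than a documented NLNZ rule.

```python
from collections import Counter


def note_prefix(note: str) -> str:
    """Return the standardized prefix: the text before the first colon."""
    return note.split(":", 1)[0].strip()


# Abridged from the three example provenance notes above.
notes = [
    "002_File extension was added: A .mcw extension was added to this filename.",
    "003_Special character(s) were removed from the file name: "
    "Forward slashes were replaced with underscores.",
    "003_Special character(s) were removed from the file name: "
    "A full stop was replaced with a hyphen.",
]

counts = Counter(note_prefix(note) for note in notes)
for prefix, total in sorted(counts.items()):
    print(prefix, total)
```

Because the two character-replacement notes share a prefix, they are counted together even though their free-text explanations differ.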

2.5 Preparing to Upload the Files: Technological Dependencies

Even though INDIGO does not impose limits on how many files can be loaded in a single deposit, the ingest tool has several technological dependencies that affect how files can be uploaded:

All files in a single deposit must share the SAME provenance, descriptive and administrative metadata.

In order to capture a file’s original path, the file must be loaded from within its original folder structure.
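Taken together, the two constraints above mean that files can share a deposit only if they come from the same original folder and carry exactly the same set of provenance notes. The sketch below shows that grouping logic; it is an illustration, not INDIGO's behavior, and the file names and note labels are invented.

```python
from collections import defaultdict


def plan_deposits(files):
    """Group files by (original folder, exact set of provenance notes).

    Each distinct combination needs its own deposit under the two
    constraints described above.
    """
    deposits = defaultdict(list)
    for name, folder, notes in files:
        deposits[(folder, frozenset(notes))].append(name)
    return dict(deposits)


# Hypothetical files from one folder; names and note labels are invented.
folder = r"\FRINGE\FRINGE FEST 93\PRODUCTION"
files = [
    ("BUDGET.mcw", folder, ["extension added"]),
    ("SCHEDULE.mcw", folder, ["extension added"]),
    ("CAST-LIST.mcw", folder, ["extension added", "full stop replaced"]),
    ("NOTES.doc", folder, []),
]

deposits = plan_deposits(files)
print(len(deposits))  # 3 deposits for 4 files in a single folder
```

Even in this toy case the number of deposits grows with every new combination of actions, which is the effect described in the upload stage of the case study.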

2.6 Uploading the Files

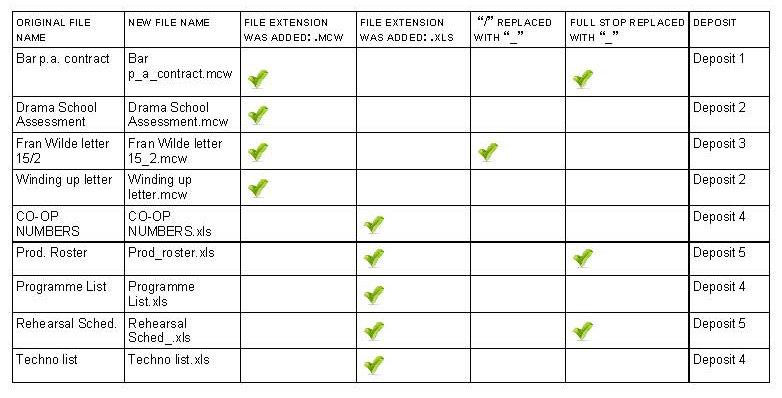

Under the above technological and policy constraints, files were uploaded into Rosetta using INDIGO. A great deal of planning was required to ensure that this was done according to their original file path, as well as to the preconditioning action that was applied. Take, for example, a group of nine files from the \FRINGE\FRINGE FEST 93\PRODUCTION folder, as illustrated in Table 2:

Table 2: Analysis of the variety of preconditioning actions applied to a single folder of files and implications for uploading.

Due to the variety of actions applied, this small group of nine files required five unique deposits. The loading progressed in this way, with the 923 files eventually requiring a total of 118 unique deposits. As a result, this relatively small collection took one staff member a total of 52 hours to upload into Rosetta.

2.7 Case-study Analysis

In addition to the policy and technological constraints that affected ingest and upload activities, there were several staff-related factors that, while not the focus of this paper, contributed to the time-consuming nature of this project.[16] Ultimately, it took a total of five years to complete the processing of this collection: one to appraise; one to describe; two and a half to do disk migration, technical analysis, and preconditioning activities; and six months to upload the files and metadata.

2.7.1 Areas of Potential Enhancement

In processing this collection, the library identified a number of areas in which its tools, models, or processes could be enhanced to better support the tasks required. First, the NLNZ identified how its ingest tool could be enhanced to create greater efficiencies. We have recently re-developed INDIGO to apply provenance notes and descriptive metadata at a more granular level and in an automated way. We are also working towards enhancing INDIGO so that file path information can be provided as contextual metadata without the need to upload files from their original folders. These enhancements will result in greater flexibility when building complex deposits with varied metadata requirements; it would also mean that a collection similar to the one described in this case study could be uploaded in a single deposit. This will still allow us to apply provenance actions and contextual metadata to each individual file, but without the need to load those objects separately, thus saving dozens of hours in staff resource time.

Second, the NLNZ has looked closely at how preservation metadata is stored within the Rosetta system. Metadata in Rosetta largely conforms to the PREMIS model.[17] In the course of implementing the Preconditioning Policy and creating provenance notes, which form part of a file’s core metadata, we concluded that the information we require in the provenance note to satisfy the Preconditioning Policy is more detailed than our current event metadata elements allow. As a result, the NLNZ has recommended that the Editorial Committee for PREMIS investigate a new provenance aspect of the data model so that our requirements can be more accurately satisfied.

2.7.2 Lessons Learned

Inevitably, one gets to the end of a complex project such as this and wishes one had made different choices in some respects while feeling pleased with others. The following are several examples of lessons learned throughout the process of this case study. First, when the collection was initially received, the files were copied and stored in new folders. This was to facilitate the initial technical assessment and get a sense of the kinds of issues within the file set. This was strictly a working copy. However, when the files were transferred to the digital preservation analyst for preconditioning activities, it was this copy that was provided, rather than the original set of files in their original folder context. The implications of this decision became clear when the files had been “cleaned” and were being prepared for upload. With the preconditioned files now in a flat list, the “cleaned” files had to be re-integrated into their original folder structure prior to upload, which was a time-consuming exercise. This small error resulted in many extra hours of work.

Second, the arrangement and description of the collection was done long before the technical analysis and preconditioning actions took place, which proved to be somewhat problematic. In this particular case, some files were described, but then following an in-depth technical analysis, we decided not to keep them. Thus, time was spent working on items that were not retained. Fortunately, this only occurred for a small number of files.

Third, taking a disk inventory immediately upon receiving the media and capturing a detailed listing of how the files were arranged—including original file paths and dates—was extremely valuable. In this way, staff were able to confirm (or re-create) the original structure when files were accidentally moved around. Also, at each step of the ingest process, actions and decisions were carefully documented in an ingest report. This report proved very important in explaining and contextualizing actions and decisions to new staff members.
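A disk inventory of the kind described in the third lesson can be captured with a short script. The sketch below is an assumption about what such a listing might contain (relative path, size, and last-modified time); it is not the NLNZ's inventory format, and the folder and file names used in the demonstration are invented.

```python
import os
import tempfile
from datetime import datetime, timezone
from pathlib import Path


def take_inventory(root: Path):
    """List every file under root with its relative path, size, and date."""
    rows = []
    for dirpath, dirnames, filenames in os.walk(root):
        dirnames.sort()  # walk folders in a stable, repeatable order
        for filename in sorted(filenames):
            path = Path(dirpath) / filename
            info = path.stat()
            modified = datetime.fromtimestamp(info.st_mtime, tz=timezone.utc)
            rows.append({
                "relative_path": path.relative_to(root).as_posix(),
                "size_bytes": info.st_size,
                "modified_utc": modified.isoformat(),
            })
    return rows


# Hypothetical disk contents created in a temporary folder for the demo.
with tempfile.TemporaryDirectory() as tmp:
    disk = Path(tmp) / "disk01"
    (disk / "FRINGE" / "PRODUCTION").mkdir(parents=True)
    (disk / "FRINGE" / "PRODUCTION" / "BUDGET").write_bytes(b"example")
    inventory = take_inventory(disk)
    print(inventory[0]["relative_path"], inventory[0]["size_bytes"])
```

Saving a listing like this before any files are copied or renamed gives staff a fixed reference against which the original structure can later be confirmed or re-created.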

3. Conclusion

As this case study illustrates, applying archival and digital preservation policies to complex digital heritage collections can be difficult and labor intensive. It is clear that archival and digital preservation policies developed and implemented within institutions can have intended and unintended consequences for business processes and workflows. While concepts and rationales such as “all changes must be reversible” may be perfectly clear and logical in the abstract, they can be difficult to implement in reality. Principles such as original order that are well established and function for traditional, non-digital collections may be challenging to apply to digital collections. Tools and technology that work well for homogeneous, modern digital collections might not cope well with varied, complex legacy collections.

The relationship between policy and implementation is described very aptly in Born Digital: Guidance for Donors, Dealers, and Archival Repositories: “The unexpected will continue to challenge and surprise repositories acquiring and managing born-digital materials, despite reasonable efforts at creating clear and actionable policies.”[18] Thus, heritage institutions should carefully consider how (or whether) traditional archival policies apply to digital collections, and how these policies can be applied in bulk. The collection illustrated here was a relatively small one, but the amount of effort required to process it was alarming. As digital collections become larger and more complex, we must identify ways that such archival policies can be applied so that they do not compromise efficiency.

Ensuring that policies are regularly reviewed, enhancing tools to make workflows as efficient as possible, and speaking to donors of digital collections before they are donated may all contribute to more effective and streamlined processes. We must always be prepared to acknowledge that “[t]he stewardship of born-digital archival collections promises nothing if not routine encounters with the unexpected.”[19]

[2] Joint Operations Group, Policy (JOGP), Department of Internal Affairs, 2012, “Digital content preconditioning policy,” pp. 1-2. This is an internal policy document; however, it will be made available on request. Contact Peter.McKinney@dia.govt.nz to make a request.

[9] Maria Guercio, “Principles, Methods, and Instruments for the Creation, Preservation, and Use of Archival Records in the Digital Environment,” American Archivist 64, no. 2 (Fall/Winter 2001): 238–69, 251.

[10] Several different machines and operating systems were used during the media migration. These included Mac Performa 580CD (Mac OS 7.5.1); iMac (OS 9.2 / OS 10.1.2); HP Compaq (Windows XP); Toshiba 3.5-inch floppy-disk drive (USB FDD kit); and Iomega zip-disk drive.

[11] The following tools were used to identify the files: DROID, TriD, Fido, Hex editor (Neo Hex), Reference Objects (trusted samples of particular file types for comparison), and Reference Standards (formal file format release papers that describe how a specific file type is made or implemented).

[12] One such character, the “/”, had to be removed during the media migration process and prior to even storing the files on our secure server due to the fact that Windows does not support it as a file name character.

[13] Tools used to rename files included Bulk Renaming Tool and Python (for generating scripts).

[14] INDIGO is an internal submission tool the NLNZ developed to integrate with the digital preservation system. It is a desktop application used by various business units to create deposits of files and metadata and upload them to Rosetta.

[15] In addition to being captured by storing the file’s original path in the object metadata, the original order was also retained and used during the arrangement and description process. Files were described according to their original order, as evidenced by their folder structure.

[16] Several staff changes occurred during this period, including a sabbatical, a maternity leave, a staff resignation, and a staff secondment to another project. These resulted in delays over the years, as new staff members had to be brought up to speed on the collection and its complexities.

[18] Gabriela Redwine, Megan Barnard, Kate Donovan, Erika Farr, Michael Forstrom, Will Hansen, Jeremy Leighton John, Nancy Kuhl, Seth Shaw, and Susan Thomas, Born Digital: Guidance for Donors, Dealers, and Archival Repositories, October 2013, MediaCommons Press, http://www.clir.org/pubs/reports/pub159/pub159.pdf, p. 19.

2.1 Media Analysis and Migration

¶ 8 Leave a comment on paragraph 8 0

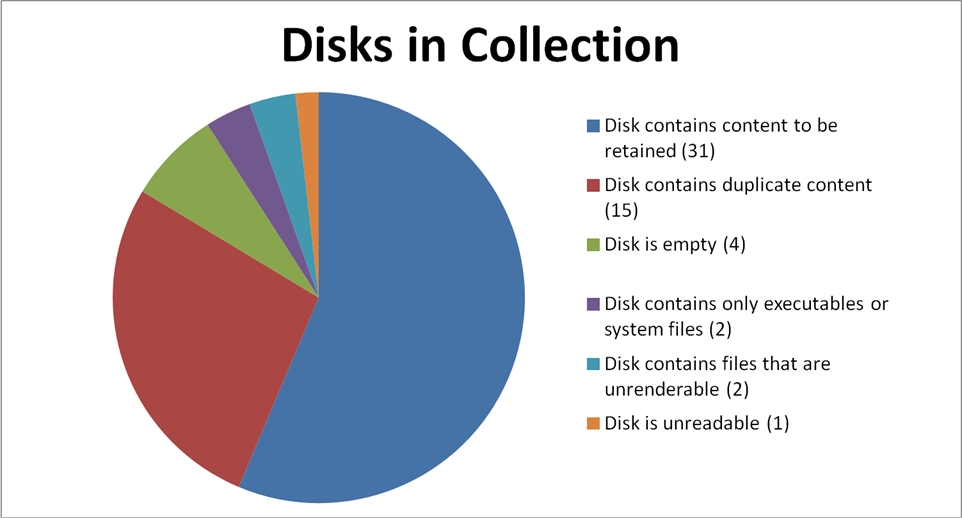

¶ 9 Leave a comment on paragraph 9 0 Table 1: Breakdown of the 55 disks received.

Table 1: Breakdown of the 55 disks received.

¶ 10 Leave a comment on paragraph 10 0

¶ 11 Leave a comment on paragraph 11 0 Once all fifty-five disks were analyzed, 923 of the 1,530 total files were migrated from the original media and put into temporary storage on a secure server to await further technical and curatorial analysis. In order to migrate the files from their original media, a number of different disk drives and operating systems were utilized.10

2.2 Technical Analysis of the Files

¶ 12 Leave a comment on paragraph 12 0 NLNZ’s digital preservation analyst then undertook a technical assessment of the files, using several tools to identify the file formats.11 This analysis revealed that while most files could be opened and read, they did have various technical issues. These included file extension problems (missing or incorrect file extensions) and file naming problems (illegal/undesirable characters or full stops in the file name).12 Of the 923 files being retained by the library, 700 (76 percent) had some kind of technical issue. The digital archivists, digital preservation analyst, and curator of the content began to plan how to address these problems. They identified a number of activities as preconditioning actions, to be performed before the files were ingested into Rosetta. Prior to conducting each preconditioning action on the original files, copies were tested to determine if the fix applied would affect the intellectual content held within the files and if it would be reversible, in accordance with the Preconditioning Policy.((A variety of methods were employed to ensure the intellectual content was preserved following preconditioning activities. These included running checksums and performing rendering checks (ensuring the file can be opened in the target application and behaves as expected).)) The technical assessment, in addition to revealing technical issues, also found that the files were arranged in a particular folder structure that the theater company had created, which grouped files by theater production, date, and record function.

2.3 Applying Preconditioning Actions

Copies of the 700 files that required preconditioning activities were passed to the digital preservation analyst for processing. A range of tools were used to add/change file extensions and remove/replace undesirable characters from the file name.13 A Python script was created to generate a detailed report indicating the original file name, the new file name, the original file path, and the change(s) applied to each file. Based on the preconditioning action applied, the script automatically generated a brief statement describing the action taken. At the NLNZ this statement is called a “provenance note” and forms part of a file’s core metadata. Provenance notes are created during the upload process using the NLNZ’s ingest tool, INDIGO.14
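
The library's script is not reproduced in this article, so the following is only a sketch of how such a report might be generated. The replacement rules, the wording of the generated statements, the `NOTE_PREFIX` value, and the folder `\SAMPLE FOLDER` are all hypothetical. The rules were chosen so that the sample name "Kieron doc. 5/5/92" becomes "Kieron doc-5_5_92.mcw", and the extension to add is passed in by hand because it would come from separate format identification.

```python
import csv
import io
import re

# Hypothetical prefix; the library's actual provenance-note syntax is not shown here.
NOTE_PREFIX = "PRECONDITIONING"


def precondition(name, add_extension=None):
    """Return (new_name, actions) for one file name."""
    actions = []
    if add_extension:
        # The file had no extension, so the whole name is treated as the stem.
        stem, suffix = name, "." + add_extension
        actions.append(f"added file extension .{add_extension}")
    else:
        stem, dot, ext = name.rpartition(".")
        if dot:
            suffix = "." + ext
        else:
            stem, suffix = name, ""
    # Assumed rule: a full stop (and any spaces after it) becomes a hyphen.
    cleaned = re.sub(r"\.\s*", "-", stem)
    if cleaned != stem:
        actions.append("replaced full stop in file name with hyphen")
        stem = cleaned
    # Assumed rule: each undesirable character becomes an underscore.
    cleaned = re.sub(r'[\\/:*?"<>|]', "_", stem)
    if cleaned != stem:
        actions.append("replaced undesirable characters with underscore")
        stem = cleaned
    return stem + suffix, actions


def build_report(files):
    """files: iterable of (original_path, original_name, add_extension)."""
    rows = []
    for original_path, original_name, add_extension in files:
        new_name, actions = precondition(original_name, add_extension)
        # One statement per action, each starting with the same prefix.
        notes = [f"{NOTE_PREFIX}: {action}; original file name: {original_name}"
                 for action in actions]
        rows.append({
            "original_name": original_name,
            "new_name": new_name,
            "original_path": original_path,
            "changes": "; ".join(actions),
            "provenance_notes": notes,
        })
    return rows


def report_as_csv(rows):
    """Serialize the report rows as CSV text."""
    buffer = io.StringIO()
    fields = ["original_name", "new_name", "original_path", "changes", "provenance_notes"]
    writer = csv.DictWriter(buffer, fieldnames=fields)
    writer.writeheader()
    for row in rows:
        writer.writerow({**row, "provenance_notes": " | ".join(row["provenance_notes"])})
    return buffer.getvalue()


rows = build_report([(r"\SAMPLE FOLDER", "Kieron doc. 5/5/92", "mcw")])
print(report_as_csv(rows))
```

Keeping one generated statement per action, rather than one per file, makes it straightforward to report later on every object that received a given kind of fix.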

2.4 Preparing to Upload the Files: Policy Dependencies

As each preconditioning action must be reversible and well documented, each file must be uploaded with enough metadata to describe its preconditioning actions in such a way that they can be undone, should it prove necessary to re-create the file exactly as it was when it arrived at the library. Another policy dependency is an archival one concerning original order. The library received the files in a particular folder structure, which provided important archival context to the collection. While the files would not be stored within the database in their original order, it was determined that the original folder path of each file was a key piece of information that should be captured as part of its object metadata.15
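
To make the reversibility requirement concrete, the sketch below renames a file, records what is needed to undo the rename, and uses a checksum to confirm that the content is unchanged. It is an illustration only: the record fields, the function names, and the folder `\HYPOTHETICAL\FOLDER` are assumptions, not the metadata schema used in Rosetta or INDIGO.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_of(path):
    """Checksum of the file's bytes; a rename must not change it."""
    return hashlib.sha256(Path(path).read_bytes()).hexdigest()


def rename_with_record(path, new_name, original_folder):
    """Rename a file and return a record detailed enough to undo the change."""
    path = Path(path)
    before = sha256_of(path)
    target = path.with_name(new_name)
    path.rename(target)
    if sha256_of(target) != before:
        raise ValueError("content changed during preconditioning")
    return {
        "original_name": path.name,
        "new_name": new_name,
        "original_folder": original_folder,  # archival context, kept as metadata
        "sha256": before,
    }


def undo_rename(record, folder):
    """Restore the original file name using only the stored record."""
    current = Path(folder) / record["new_name"]
    restored = current.with_name(record["original_name"])
    current.rename(restored)
    return restored


with tempfile.TemporaryDirectory() as folder:
    original = Path(folder) / "notes 1992"
    original.write_text("opening night running order")
    record = rename_with_record(original, "notes 1992.txt", r"\HYPOTHETICAL\FOLDER")
    restored = undo_rename(record, folder)
    print(restored.name, sha256_of(restored) == record["sha256"])
```

The original folder path travels in the record rather than in the storage location, which mirrors the decision to capture original order as metadata instead of as a physical arrangement.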

In the interest of better reporting on preconditioning actions in the future, a policy decision was made that a different provenance note should be created for each preconditioning activity applied. In addition, a business rule was established identifying a syntax for provenance notes so that all notes are phrased in the same way. Each note begins with a standardized prefix, so that preservation staff will be able to easily report on (and retrieve) objects on which the same types of provenance activities were performed. For example:

Kieron doc. 5/5/92 [ORIGINAL file name]

Kieron doc-5_5_92.mcw [NEW file name]

This file was missing an extension and had unsupported characters in the file name. Three preconditioning actions were applied to it, requiring three distinct provenance notes:

2.5 Preparing to Upload the Files: Technological Dependencies

Even though INDIGO does not impose limits on how many files can be loaded in a single deposit, the ingest tool has several technological dependencies that affect how files can be uploaded:

2.6 Uploading the Files

Under the above technological and policy constraints, files were uploaded into Rosetta using INDIGO. A great deal of planning was required to ensure that files were grouped for upload according to both their original file path and the preconditioning actions applied to them. Take, for example, a group of nine files from the \FRINGE\FRINGE FEST 93\PRODUCTION folder, as illustrated in Table 2:

Table 2: Analysis of the variety of preconditioning actions applied to a single folder of files and implications for uploading.

Due to the variety of actions applied, this small group of nine files required five unique deposits. The loading progressed in this way, with the 923 files eventually requiring a total of 118 unique deposits. As a result, this relatively small collection took one staff member a total of 52 hours to upload into Rosetta.
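
One way to see why the number of deposits grows so quickly is to group files by the pair (original folder, set of actions applied). The sketch below assumes, as a reading of the constraints described above, that files can share a deposit only when both values match. The nine file names and their action sets are placeholders invented for illustration; they are not the contents of Table 2.

```python
from collections import defaultdict


def plan_deposits(files):
    """Group (name, folder, actions) tuples into deposits by folder and action set."""
    deposits = defaultdict(list)
    for name, folder, actions in files:
        # Sorting makes the key independent of the order the actions were listed in.
        key = (folder, tuple(sorted(actions)))
        deposits[key].append(name)
    return dict(deposits)


FOLDER = r"\FRINGE\FRINGE FEST 93\PRODUCTION"

# Placeholder data: nine hypothetical files with five distinct action combinations.
files = [
    ("file_1", FOLDER, []),
    ("file_2", FOLDER, []),
    ("file_3", FOLDER, []),
    ("file_4", FOLDER, ["extension added"]),
    ("file_5", FOLDER, ["extension added"]),
    ("file_6", FOLDER, ["characters replaced"]),
    ("file_7", FOLDER, ["characters replaced"]),
    ("file_8", FOLDER, ["characters replaced", "extension added"]),
    ("file_9", FOLDER, ["full stop replaced"]),
]

deposits = plan_deposits(files)
for (folder, actions), names in deposits.items():
    print(len(names), "file(s):", actions or "no preconditioning")
print("deposits needed:", len(deposits))
```

Under this grouping rule the deposit count depends on the number of distinct combinations rather than on the number of files. At the figures reported above, 52 hours across 118 deposits works out to roughly 26 minutes per deposit.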

2.7 Case-study Analysis

In addition to the policy and technological constraints that affected ingest and upload activities, there were several staff-related factors that, while not the focus of this paper, contributed to the time-consuming nature of this project.16 Ultimately, it took a total of five years to complete the processing of this collection: one to appraise; one to describe; two and a half to do disk migration, technical analysis, and preconditioning activities; and six months to upload the files and metadata.

2.7.1 Areas of Potential Enhancement

In processing this collection, the library identified a number of areas in which its tools, models, or processes could be enhanced to better support the tasks required. First, the NLNZ identified how its ingest tool could be enhanced to create greater efficiencies. We have recently re-developed INDIGO to apply provenance notes and descriptive metadata at a more granular level and in an automated way. We are also working towards enhancing INDIGO so that file path information can be provided as contextual metadata without the need to upload files from their original folders. These enhancements will result in greater flexibility when building complex deposits with varied metadata requirements; they will also mean that a collection similar to the one described in this case study could be uploaded in a single deposit. This will still allow us to apply provenance actions and contextual metadata to each individual file, but without the need to load those objects separately, thus saving dozens of hours of staff time.

Second, the NLNZ has looked closely at how preservation metadata is stored within the Rosetta system. Metadata in Rosetta largely conforms to the PREMIS model.17 In the course of implementing the Preconditioning Policy and creating provenance notes, which form part of a file’s core metadata, we concluded that the information we require in the provenance note to satisfy the Preconditioning Policy is more detailed than our current event metadata elements allow. As a result, the NLNZ has recommended that the Editorial Committee for PREMIS investigate a new provenance aspect of the data model so that our requirements can be more accurately satisfied.

2.7.2 Lessons Learned

Inevitably, one gets to the end of a complex project such as this and wishes one had made different choices in some respects while feeling pleased with others. The following are several examples of lessons learned throughout the process of this case study. First, when the collection was initially received, the files were copied and stored in new folders. This was done to facilitate the initial technical assessment and to get a sense of the kinds of issues within the file set; it was strictly a working copy. However, when the files were transferred to the digital preservation analyst for preconditioning activities, it was this copy that was provided, rather than the original set of files in their original folder context. The implications of this decision became clear when the files had been “cleaned” and were being prepared for upload. Because the preconditioned files were now in a flat list, they had to be re-integrated into their original folder structure prior to upload, which was a time-consuming exercise. This small error resulted in many extra hours of work.

Second, the arrangement and description of the collection was done long before the technical analysis and preconditioning actions took place, which proved to be somewhat problematic. In this particular case, some files were described, but then following an in-depth technical analysis, we decided not to keep them. Thus, time was spent working on items that were not retained. Fortunately, this only occurred for a small number of files.

Third, taking a disk inventory immediately upon receiving the media and capturing a detailed listing of how the files were arranged (including original file paths and dates) was extremely valuable. In this way, staff were able to confirm (or re-create) the original structure when files were accidentally moved around. Also, at each step of the ingest process, actions and decisions were carefully documented in an ingest report. This report proved very important in explaining and contextualizing actions and decisions to new staff members.
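
A disk inventory of the kind described above can be sketched in a few lines of Python. This is not the procedure the library used; the function name, the recorded fields, and the choice of SHA-256 are assumptions. The checksum is included so that a file can still be matched to its original location after it has been renamed or moved.

```python
import hashlib
import os
import tempfile
from datetime import datetime, timezone


def take_inventory(root):
    """List every file under root with its relative path, size, date, and checksum."""
    rows = []
    for folder, dirs, files in os.walk(root):
        dirs.sort()  # walk subfolders in a stable order
        for name in sorted(files):
            full = os.path.join(folder, name)
            stat = os.stat(full)
            with open(full, "rb") as handle:
                digest = hashlib.sha256(handle.read()).hexdigest()
            rows.append({
                "relative_path": os.path.relpath(full, root),
                "size_bytes": stat.st_size,
                "modified_utc": datetime.fromtimestamp(
                    stat.st_mtime, tz=timezone.utc).isoformat(),
                "sha256": digest,
            })
    return rows


# Demonstration on a temporary folder standing in for a mounted disk.
with tempfile.TemporaryDirectory() as root:
    os.makedirs(os.path.join(root, "PRODUCTION"))
    with open(os.path.join(root, "PRODUCTION", "budget.txt"), "w") as handle:
        handle.write("sample")
    for row in take_inventory(root):
        print(row["relative_path"], row["size_bytes"], row["sha256"][:12])
```

Because the checksum depends only on file content, an inventory like this can be used to locate the original folder of a file even when only a renamed working copy is at hand, provided the content itself has not been altered.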

3. Conclusion

As this case study illustrates, applying archival and digital preservation policies to complex digital heritage collections can be difficult and labor intensive. It is clear that archival and digital preservation policies developed and implemented within institutions can have intended and unintended consequences on business processes and workflows. While concepts and rationales such as “all changes must be reversible” may be perfectly clear and logical in the abstract, they can be difficult to implement in reality. Principles such as original order that are well established and function for traditional, non-digital collections may be challenging to apply to digital collections. Tools and technology that work well for homogeneous, modern digital collections might not cope well with varied, complex legacy collections.

The relationship between policy and implementation is described very aptly in Born Digital: Guidance for Donors, Dealers, and Archival Repositories: “The unexpected will continue to challenge and surprise repositories acquiring and managing born-digital materials, despite reasonable efforts at creating clear and actionable policies.”18 Thus, heritage institutions should carefully consider how (or whether) traditional archival policies apply to digital collections, and how these policies can be applied in bulk. The collection described here was a relatively small one, but the amount of effort required to process it was alarming. As digital collections become larger and more complex, we must identify ways that such archival policies can be applied so that they do not compromise efficiency.

Ensuring that policies are regularly reviewed, enhancing tools to make workflows as efficient as possible, and speaking to donors of digital collections before they are donated may all contribute to more effective and streamlined processes. We must always be prepared to acknowledge that “[t]he stewardship of born-digital archival collections promises nothing if not routine encounters with the unexpected.”19

Leigh Rosin

Digital Archivist – National Library of New Zealand

Footnotes

License

This work is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 3.0 United States License.